View source for User talk:Chase-san

Archive: 2014 and older


Contents

| Thread title | Replies | Last modified |
|---|---|---|
| broken links | 1 | 21:03, 29 September 2017 |
| Wiki spam | 4 | 03:34, 25 August 2017 |
| New User Flood | 29 | 14:33, 12 October 2014 |
| Hey guys, can I... | 9 | 15:03, 27 February 2013 |
| Approximate ELO | 32 | 07:05, 14 December 2012 |

Hi mate,

Links pointing to http://file.csdgn.org are broken. I fixed one of yours in 1on1 to point to http://robocode-archive.strangeautomata.com/robots/ but I presume that you would rather host your bot yourself.

Thank you for dealing with the recent spam attack.

Most of the discussion about this attack is happening at Thread:User talk:Skilgannon/Spam bot invasion, including a list of users who need to be nuked and some suggestions about what to do next. If you haven't read that thread yet, you should.

I already saw that page and I'm working on what I can. I don't have system access, just admin, so I can't change any logic or lock the wiki to clean up; I'm just going to begin a super ban wave.

Abdul Abhi2828 Abhishek.upadhyay11 Adamsmith Ahmad1234 Ahmadd123 Ajaykapadia2 Akki Alex Alexa Alexdogge1 Alexyokei Alhaji Amit8923 Andoralifs Andrusmith1 Anisingh Anjilajolia Anjilajoliaa ANNU17 Anshu01 Araja Asdasd Ashish12345 Ashish786786 Astrologerbaba16 Avisupport Baba786 Bisafepa

Have been blocked and their pages deleted. I'll keep you updated but this is a huge list and I have work tomorrow. :(

Bitcoinsssupport Blizzardbluee Bookentry Bradyswan Brandi84 Bujelizala Caira Caleb Cbkjvjvkd Chorsupport Chunnu7294383gulabo Cooljass1 Cuwerokume Cybertechnisdhhdh Dabbu Dante Dasfgs Dear Depih12 Dev123 Dexter6789 Dfasffasf Dfgvfgvfsd5 Dfjhfgj Dfsdf Dgdfhfgjhfgh Dhanraj99 Dinni999 Dk923478 Dolka01 Dpkapadia1 Dsgdfhg Dsgsdhjfe Dykywipy Ellis S Skinner Emma000001 Emmawatson Emmie2680 Engineer123 Ergugfdguf Eswt Faer Fairsearches.pradeep Faisal Faulkner Fdbhdf525 Fibarip Frankgel Fransis99 Fullsupport Gakllerjohn Gattu Ghfdhfg Ghufgt567121 Gibson Ginjuilinhj Gk707027 Gofyozilto Gopalamj Gtrtgum Guwogepome Hajsfdjorkgpgk12 Harryji Hemantvar Herry9 Ginjuilinhj

Have been blocked and their pages deleted. This is only a third of the "new list" so far and I'm already pooped. We need a better solution.

List updated.

I made a new pass through Special:ActiveUsers (when a user is nuked, it will drop out of this list), checked each one to make sure that it is indeed a spam account, and used a script to filter out the ones that you already removed.
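The filtering step described above could look something like the following. This is only a minimal sketch: the class and method names are made up for illustration, and it assumes both lists are already available as plain user names (the actual script is not shown in this thread).

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class SpamListFilter {
    /**
     * Returns the candidate names that are not in alreadyBlocked,
     * keeping the original order and dropping repeated names.
     */
    static List<String> remaining(List<String> candidates, Set<String> alreadyBlocked) {
        // Start from the blocked set so that both blocked names and repeats are rejected.
        Set<String> seen = new HashSet<>(alreadyBlocked);
        List<String> result = new ArrayList<>();
        for (String name : candidates) {
            if (seen.add(name)) { // add() returns false if the name was already present
                result.add(name);
            }
        }
        return result;
    }
}
```

Dropping repeats matters because pasted lists can contain the same name twice.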

There seems to be a flood of new users. I can only guess that these are not people interested in Robocode, but rather some kind of spam bot (or spam bots). I think we need some way to captcha user creation.

While we are at it, remove some of those "new users" without any edits.

Well a few, for example I don't have access to actual site stuff (so I can't add a captcha).

We could just go through and manually delete them all, but there is no bulk way to do that (that I know of).


For future reference, you can see who's a wiki administrator or bureaucrat by using the "Group:" filter on the user list page.

Hate to break it to you Chase, but we already have a captcha on user creation. In fact, we have *TWO* captchas (reCaptcha, plus a simple math question presented as an image) required for user creation, and the bots involved in these user creations seem to crack both. I tend to wonder if they're using a "mechanical turk" type of system to outsource bulk captcha breaking to humans.

The only reason they very rarely succeed at actually posting content these days is a custom MediaWiki extension I added, which blocks any edit that adds new external URL links if the user account was created within X hours of the attempt. (Note: it does not block external URLs that are not formatted as MediaWiki links, such as those on the participants page, of course.)

Perhaps I should augment this extension to remove users whose only attempted edits during a 1 week period were blocked in this fashion?

My experience with captcha-locked registration is that at some point someone, most likely a human, provides an answer to at least one question. After this, bots will register like crazy. On my site I had a non-googlable question, which held the bots off for a month or two, but sooner or later they will come.

The only way to deal with it, is to remove old questions and generate new ones.

"Perhaps I should augment this extension to remove users whose only attempted edits during a 1 week period were blocked in this fashion?"

That policy sounds pretty reasonable, as long as it is clearly written somewhere new users would see.

Make it 3 days and that'll do it for me. We should also nuke users without any posts who registered more than 3 days ago (and were not added by an admin), or something.

I think there are some features enabled only for registered users. Something with cookies, but I cannot recall what they are. So quiet registered users have a right to exist.

But quiet folks do not contribute, so it is probably fine to sweep them away as bot candidates.

Actually, now that I think about it, I think Voidious used Asirra to prevent issues on the BerryBots wiki. Asirra is closing down this year, so we can't use that, but there should be some other image-based captchas around.

As we all know, classification is a very difficult AI problem, but is almost trivial for us humans. :)

Usually it is sufficient to ask what "2+2" is, maybe in the form "two plus two" so it is not that obvious for a parser. Since we are fighting attacks not designed against this particular wiki, that will be sufficient. Once a traitor gives the answer to this question to a botnet, we will ask what 2+3 is, and so on.

I decided to try the registration process. We do have a math question and a number-recognition image. But these bots are advanced. They clearly can parse/recognize numbers and do simple math with them.

I think we need at least one captcha which deals with something other than numbers, and we need it ASAP. My RSS feed is spammed by new registration announcements way more often than I wish to know.

Or, as Rednaxela noted above, they could just be outsourcing it to humans. Though if they are advanced bots, we could try something like Asirra, which makes users select only photos which have a certain type of animal in them, though it seems Asirra itself won't be around for much longer. (Voidious, since you use Asirra for the BerryBots wiki, you may also want to look into a new captcha system.)

We might want to consider locking registration until we can come up with a solution. The wiki isn't particularly active at the moment and there have been 166 registrations since they started on September 23 (and 1 which is questionable). None of them have posted a single thing. That averages out to around 10-11 new users a day.

I guess that would be fine for a few days, but if someone's willing to go to that trouble, why not just implement one of the solutions that have already been proposed, such as deleting accounts that only post with external links in their first few days, or your suggestion to have a hidden textbox that must be left blank?

Now that I had some time to think on the problem. I do remember using one really simple and really effective tool to prevent bots from registering.

It's called a reverse captcha. Basically you use it in combination with a normal captcha, but you are supposed to leave it blank. Give it an id and name like "captcha" and bots will almost always fill it in with something. You then either hide it via CSS (most bots don't read CSS, and even if they do, it often falls into the machine vision problem), or write next to it that you are not supposed to fill it in.
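On the server side, the reverse captcha check boils down to rejecting any submission where the hidden field is not blank. A minimal sketch, assuming the form fields arrive as a plain map; the class name, method name, and the way the form is passed in are all hypothetical, and only the field name "captcha" comes from the suggestion above:

```java
import java.util.Map;

final class ReverseCaptcha {
    // Name of the decoy field; "captcha" follows the suggestion in the post above.
    static final String HONEYPOT_FIELD = "captcha";

    /**
     * Returns true when the decoy field was filled in, which a human
     * following the instructions (or not seeing the field) would not do.
     */
    static boolean looksLikeBot(Map<String, String> formFields) {
        String value = formFields.get(HONEYPOT_FIELD);
        return value != null && !value.trim().isEmpty();
    }
}
```

A missing field is treated the same as a blank one here, since some browsers omit empty inputs; whether that is the right call depends on how the form is submitted.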

Preventing registration from non-local referrers should also reduce the number of bot registrations.

There is also the twobox captcha, where you have one set of instructions for two text boxes that each have a value already set. You tell the user to change the value of one but not the other (usually something simple). This usually requires a custom bot to attack the site, since such a captcha is beyond the normal bots (which generally only attack standard captcha implementations, such as reCAPTCHA).

Hey guys can I get enough permissions not to have to go through all the captcha to link externally, etc. I think you are fairly certain by now I am not going to spam the wiki to death right?

I mean I am probably not the smartest robocoder by any stretch of imagination, and I often end up with my foot in my mouth. But I generally mean well.

Done. And while I was at it, I added a few more as well, people who have proved they can make meaningful contributions and delete spam when they see it. If I've forgotten anybody just yell!

Sorry, I didn't realize life was so littered with captchas for the non-admins. =) Maybe we can lighten the settings a bit for registered users? Seems like we get a steady amount of wiki spam that we just have to delete/block. All that captcha suffering may be for naught.

Not sure of a good way to go about fixing the problem. We could always switch to using KittenAuth. Asirra is a slightly newer MS based variant of the same type of captcha.

Hi,

I realize that I am making a bold request considering I have only been a member for a little over a month, but I would greatly appreciate having my status upgraded to administrator.

In addition to the external link issue, it seems there has been a flood of spam recently and I would like to help.

Thanks.

Hey all. It's a bit late, but I've taken some measures to both lighten the settings for registered users, and also reduce the amount of spam.

- Adding external URLs to a page no longer triggers the captcha (registering an account still triggers the captcha)

- Adding an external URL to a page is outright disallowed until 24 hours after the account's first edit.

Hopefully that helps.
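The 24-hour rule above amounts to a single time comparison. The sketch below only illustrates that logic and is not the actual MediaWiki extension (which is not shown in this thread); the class and method names are hypothetical, and it assumes an account that has never edited is also refused.

```java
import java.time.Duration;
import java.time.Instant;

final class LinkCooldown {
    // Waiting period taken from the rule described above.
    static final Duration COOLDOWN = Duration.ofHours(24);

    /**
     * Returns true if an account whose first edit happened at firstEdit
     * may add an external URL at time now. firstEdit is null when the
     * account has not edited yet (assumed here to mean "not allowed").
     */
    static boolean mayAddExternalLink(Instant firstEdit, Instant now) {
        if (firstEdit == null) {
            return false;
        }
        // Allowed once a full 24 hours have passed since the first edit.
        return !now.isBefore(firstEdit.plus(COOLDOWN));
    }
}
```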

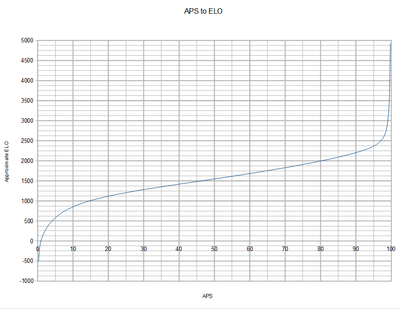

I have been mulling over ways to approximate ELO for a while. Originally it was used for ranking (2000 club, etc.); however, the ratings have drifted considerably, and with the advent of APS there is no real need for it. The number is still nice, though, even if the methods for calculating it have problems.

So I figured we could get our nice number without a lot of number crunching by way of a simple equation. At least that was the original theory. However, APS doesn't map very well to ELO, which is not at all linear, and the data set required for calculating such an equation is incomplete.

However, here are my token efforts at doing just that. The dataset I used was the 2009 Glicko-2 and APS, as it was likely the most similar to the old ELO rankings. I would have used those, but they lacked an APS column, and the common bots between them don't exactly line up very well (plus that decimates my data set even more).

    // Slower, slightly more accurate fit: maps APS (x, in percent) to an approximate ELO.
    public static final double calculateElo(double x) {
        double a = 169.1;
        double b = 0.02369;
        double c = 334.2;
        return a * Math.cosh(b * x) + c * Math.log(x);
    }

    // Faster rational fit that uses only basic arithmetic.
    public static final double calculateEloFast(double x) {
        double a = 0.7082e-03;
        double b = -0.3340e-05;
        double c = 0.3992e-02;
        return 1.0 / (a + b * x + c / x);
    }

The first is a bit more accurate: 0 maps to negative infinity and 100 to around 2450 (it should be positive infinity, but I did what I could). However, with a logarithm and a cosh, it is a bit heavy to be called 700+ times every time a page loads (I think, at least). The second is slightly less accurate: 0 maps to 0 and 100 to about 2415. With mostly simple math, it is much cheaper to execute.

So thoughts, concerns, scorn?

For a precise ELO calculation you would need the full pairwise matrix. But for an approximation based on an APS column, it looks good.

Another way to deal with rating drift (without the full pairwise matrix) is calculating the average of all ELO ratings, then calculate the difference from the average to 1600, then add/subtract the difference to all drifted ratings. So you have ratings centered around 1600. Works with both ELO and Glicko-2 ratings columns.
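The averaging-and-shifting idea above can be sketched as follows. This is an illustrative version that computes the average from the ratings passed in; the class and method names are made up for the example.

```java
final class RatingCentering {
    /**
     * Returns a copy of ratings shifted by one common offset so that
     * their average equals desiredAverage. Differences between any two
     * ratings are left unchanged.
     */
    static double[] center(double[] ratings, double desiredAverage) {
        if (ratings.length == 0) {
            return new double[0];
        }
        double sum = 0.0;
        for (double r : ratings) {
            sum += r;
        }
        // Offset between where the average is and where we want it.
        double shift = desiredAverage - sum / ratings.length;
        double[] centered = new double[ratings.length];
        for (int i = 0; i < ratings.length; i++) {
            centered[i] = ratings[i] + shift;
        }
        return centered;
    }
}
```

Because every rating moves by the same amount, expected-score estimates derived from rating differences are not affected.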

Does it currently do that? The Glicko-2 has drifted up; the 2000 club now consists of only the top 16 bots.

Which is why I was considering trying to make an approximate version. However, with mine, instead of drifting down I think I see higher bots drifting up and lower bots drifting even further down. I think this is because as new lower bots are added, the APS for higher robots goes up, and robots that did worse against them go down.

So it faces an entirely different kind of drift, but at least the center seems stable.

ELO/Glicko-2 work with differences between ratings.

The more competitors the ranking has, the more the rating difference between first and last places will be. This is normal.

But ELO has another drifting problem due to deflation: all competitors' ratings go down because most retired competitors have ratings above the average.

    // Current (drifted) averages of each ratings column.
    static final double DRIFTED_ELO_AVERAGE = -2397.92418506835;
    static final double DRIFTED_GLICKO2_AVERAGE = 1468.99474237645;
    // Average the ratings should be centered on.
    static final double DESIRED_AVERAGE = 1600;

    // Shifts a drifted ELO rating so the column average becomes DESIRED_AVERAGE.
    static double calculateElo(double driftedElo) {
        return driftedElo - DRIFTED_ELO_AVERAGE + DESIRED_AVERAGE;
    }

    // Same shift for the Glicko-2 column.
    static double calculateGlicko2(double driftedGlicko2) {
        return driftedGlicko2 - DRIFTED_GLICKO2_AVERAGE + DESIRED_AVERAGE;
    }

I'd be fine with a new ELO formula to replace the currently useless one, but I can't see myself ever again caring about ELO or Glicko over straight APS for the main overall score rating. APS is clear, stable, accurate and meaningful, and ELO/Glicko just seem like attempts to solve a problem we don't have. As far as new permanent scoring methods, I'm much more interested in the Condorcet / Schulze / APW ideas brought up in the discussions on Talk:Offline batch ELO rating system, Talk:King maker, and Talk:Darkcanuck/RRServer/Ratings#Schulze_.26_Tideman_Condorcet. I also really like what Skilgannon did with ANPP in LiteRumble, where 0 is the worst score against a bot and 100 is the best score against a bot, scaling linearly.

The main advantage of ELO/Glicko-2 over other systems is that they work well with incomplete pairings, and yes, we have almost no problems related to incomplete pairings.

But there are other smaller advantages, like higher accuracy with scores near 0% or 100% due to its S-shaped (logistic distribution) function. Being able to "forget" the past also makes ELO/Glicko-2 adequate in meleerumble, where historical contamination is an issue.

And I would really like to see a Ranked Pairs ranking. This would bring something new to the rumble. It is superior to the current PL (Copeland) system in almost every way, except maybe CPU/memory performance. I was thinking of building another RoboRumble server myself, designed specifically to calculate complex rankings like Ranked Pairs. But I haven't found the time to do it yet.

The thing about ranked pairs is that I haven't seen any which provide absolute scores per bot, rather than relative scores - I guess this is just part of the definition? Because of this there isn't any way to make partial improvements however, which makes it meaningless as a testing metric. Also, they are usually difficult to understand (conceptually), so understanding which pairing is causing a score loss is harder. That's why I like the Win% and Vote% as metrics for robustness, and APS for overall strength, because they are easy to understand, calculate and identify problem bots.

In Ranked Pairs, competitors causing problems are always above in the ranking, with the one causing most problems almost always directly above. Competitors below in the ranking never cause problems.

Unless you are competing for the bottom, when you reverse the logic. Competitors causing problems are always below, and competitors above never cause problems.

Although the calculation is complex, the resulting ranking follows a rather straightforward transitive logic: winners stay above losers, with this always being true between adjacent competitors in the ranking.

This is due to 3 criteria being met at the same time, ISDA, LIIA and cloneproof.

But Ranked Pairs is not meant to replace APS or ELO, it is meant to replace PL (Copeland). APS and ELO give a rating which helps track improvement while Ranked Pairs and Copeland don't.
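For readers unfamiliar with Ranked Pairs, here is a compact sketch of the usual procedure: sort all pairwise victories by margin, then lock them in from strongest to weakest, skipping any victory that would contradict the ones already locked. It assumes a full matrix of pairwise scores and uses the score margin as the victory strength; the class name, method name, and input format are assumptions for this example, and ties between equal margins are simply left in iteration order.

```java
import java.util.ArrayList;
import java.util.List;

final class RankedPairs {
    /**
     * score[i][j] is the score (for example, percent) competitor i took against j.
     * Returns competitor indices ordered from first place to last.
     */
    static int[] rank(double[][] score) {
        int n = score.length;
        // Collect every decided pairing as {winner, loser}.
        List<int[]> pairs = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                if (i != j && score[i][j] > score[j][i]) {
                    pairs.add(new int[] {i, j});
                }
            }
        }
        // Strongest victories (largest margins) first.
        pairs.sort((p, q) -> Double.compare(
                score[q[0]][q[1]] - score[q[1]][q[0]],
                score[p[0]][p[1]] - score[p[1]][p[0]]));

        // above[a][b] is true when the locked victories place a above b.
        boolean[][] above = new boolean[n][n];
        for (int[] pair : pairs) {
            int winner = pair[0];
            int loser = pair[1];
            if (above[loser][winner]) {
                continue; // would create a cycle with stronger victories: skip it
            }
            // Lock it in: everything at or above the winner is now above
            // everything at or below the loser.
            for (int a = 0; a < n; a++) {
                if (a != winner && !above[a][winner]) continue;
                for (int b = 0; b < n; b++) {
                    if (b == loser || above[loser][b]) {
                        above[a][b] = true;
                    }
                }
            }
        }
        // Order competitors by how many others they are locked above.
        int[] lockedAbove = new int[n];
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            order.add(i);
            for (int j = 0; j < n; j++) {
                if (above[i][j]) lockedAbove[i]++;
            }
        }
        order.sort((x, y) -> Integer.compare(lockedAbove[y], lockedAbove[x]));
        int[] result = new int[n];
        for (int i = 0; i < n; i++) {
            result[i] = order.get(i);
        }
        return result;
    }
}
```

As a small check, take three bots where A beats B 60-40, B beats C 70-30, and C beats A 55-45. Each bot has exactly one win, so a win count cannot separate them, while this procedure locks B over C and A over B, skips the weak C over A result, and ranks them A, B, C.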

I think if you add a d/(100-x) term it will improve the fit near the top. Perhaps use d*(100-x)^e for the slow/accurate version, although you can't use simple linear algebra to find the best e then, a genetic approach would probably be better.

I like the idea of this, just for historical reasons to have a decent mapping of APS to ELO, and would consider adding a column in the LiteRumble page for it. I would calculate this on the fly as the page loads, since for me with Python on App Engine the slow part of the code is the interpretation of the code, not the individual math operations the code calls =)

Of course I'd have to test to make sure it doesn't slow it down too much, but it should be fine, the fast version if nothing else.

Ah good idea, how about this? It is a real mess at the moment. But it seems to work, and is a bit more accurate in my opinion in placing certain bots where they belong. It also has negative and positive infinity for 0 and 100.

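    // Fast rational fit plus d/x and e/(100-x) terms, so that
    // 0 maps to negative infinity and 100 to positive infinity.
    public static final double calculateApproxElo(double x) {
        double a = 0.0007082;
        double b = -0.00000334;
        double c = 0.003992;
        double d = -124.3;
        double e = 647.4;
        double f = -72.5;
        return 1.0 / (a + b * x + c / x) + d / x + e / (100 - x) + f;
    }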

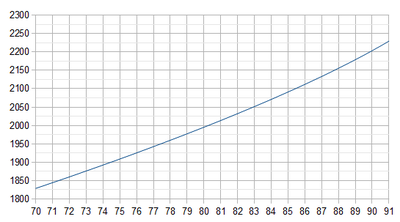

That is, Diamond and DrussGT are in the 2200 (only just), Phoenix up are in the 2100, Help and up are 2000. The 1900's start around Raiko/Lacrimas.

If you want to enforce a specific rating for a specific competitor, you can also adjust the DESIRED_AVERAGE in the algorithm above so ratings match what you want.

If you change the rating difference between competitors, you distort expected score estimation, PBI and specialization indexes. It is no longer ELO-like.

That's why the code I put above shifts all competitors up or down equally.

Looks pretty good. Any chance you could show that first graph overlayed with a scatter plot of the training samples you used?

The first one was generated (calculateEloFast). I used all samples from the 2009 rumble archive APS and Glicko-2.

From there it was hand tuned to be honest.

Wow, ok. It would only be a couple lines of Matlab/Scilab to do a proper regression though. Wouldn't that be better?

Try this: elo(APS) = -300*ln(100/APS - 1) + 1600

It uses a reflected normal sigmoid so (I think) it's based off of the same theory that the ELO is. Here's a pretty graph =) It's properly centered at 1600 as well, unlike our glicko2 implementation which it seems has drifted - 50APS doesn't line up with 1600 like it used to (perhaps due to more strong bots re-submitted like MN was saying).

Hey that's not bad! It places some of the top 20 a bit high, and that bugs me a little, but every other bot fits a bit better.

My biggest worry is it places YersiniaPestis and up in the 2100's and Tomcat is very close to the 2200 (not really a problem), and the top 2 are over halfway to the 2300s (not a problem really, since they are in their own little universe anyway).

Traditionally the 2100's started around Phoenix (85.78), but Shadow (84.69) up would be perfectly fine.

-290*ln(100/APS - 1) + 1600

Might be a better compromise.
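For anyone who wants to try the two variants side by side, the formula can be written with the scale factor (300 or 290) as a parameter. This is just a direct transcription of the formula above; the class and method names are made up for the example.

```java
final class ApproxElo {
    /**
     * elo(APS) = -scale * ln(100/APS - 1) + 1600
     * aps is in percent (0..100); scale is 300 or 290 in the posts above.
     */
    static double fromAps(double aps, double scale) {
        return -scale * Math.log(100.0 / aps - 1.0) + 1600.0;
    }

    public static void main(String[] args) {
        // Print both variants for a few sample APS values.
        double[] samples = {50.0, 80.0, 90.0};
        for (double aps : samples) {
            System.out.printf("APS %.1f: scale 300 -> %.0f, scale 290 -> %.0f%n",
                    aps, fromAps(aps, 300.0), fromAps(aps, 290.0));
        }
    }
}
```

With either scale, 50 APS lands exactly on 1600, 0 APS goes to negative infinity and 100 APS to positive infinity. With a scale of 290, 90 APS comes out at roughly 2237 and 90.2 APS at roughly 2244.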

If you take a look here it seems that the APS has actually drifted as well.

The same version of Dookious, 1.573c had APS 85.65 on 2011/03/14, currently at 86.31. I can only conclude that lots of weaker bots must have been added to the rumble. I based that formula off of the old APS-->glicko2 relationship, and if the current APS has drifted due to weak bots being added I'm not sure if we should correct for that in our formula or not. It's a tough call.

While there is no simple way to take care that drift in the long run (that I can think of), I think we should correct it as much as we can at the moment. At least to a point where the current historical numbers have some meaning again (2000 club, etc). So at least people can claim their titles.

I would like to see a way to make the approximate elo a bit more drift tolerant, but I have no (good) suggestions on how to go about doing that.

We could just retire the clubs and make new APS ones. I'm as proud of 90 APS as any of my ELO club memberships. :-)

Yeah, considering that 80% lines up pretty well with what 2000 used to be I think this is a pretty good idea. Good luck with getting your power back, hope you didn't lose anything to Sandy =)

APS has no direct relationship to ELO. The APS can go up because, as you said, a lot of weak bots were added. For ELO it is not the pure result that counts, but the result relative to the 'expected' result. If, for example, the expected result is 95-5 and the true result is too, ELO would not change, while APS will go up. For me, an ELO of 2000 for cf.proto.Shiva 2.2 would be acceptable, as it hovered there in 2006-2008 (source: The 2000 Club). That means that -280 would be an even better pick in my opinion. Of course that would not be ideal for every bot, but I think that setting the border for The 2000 Club as it used to be (a long time ago) is a good compromise.

Aargh, forget what I said. This approximate ELO is directly related to APS. I still had the old ELO in my head. My remark about the border of The 2000 Club still stands though.

Personally, I don't consider changes to APS to be "drift", but just valid changes to overall score based on the rumble population. There is no such thing as an absolute rating, even with a (theoretical) perfectly designed ELO. To me, rating drift is a change in rating when the pair wise scores have not changed.

I haven't examined the data closely (4th day without power here!), but fwiw Dookious maxed at about 2130 on the old server and Komarious was almost exactly 2000.

You make a good argument, it isn't drift per se. I suppose we could go with this version of the AELO. Unlike APS, its numbers are more `memorable`, mostly because they're larger, but also because of the logarithmic scaling at the extremes.

So instead of saying I'm ranked 90.2 vs 90, it is 2244 vs 2237. Besides, if you hit 100% you get to say you hit infinity.

There is a way to make an "absolute" rating in ELO, using an anchor competitor.

Choose one competitor, preferably a competitor which is never re-submitted, and define arbitrarily which rating it has. Then, modify rating updates so this anchor competitor rating never changes. All other competitors ratings will adjust in relation to the anchor competitor, instead of in relation to newer competitors added to the ranking.

I saw it being done in the CGOS ranking.
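The anchor idea can be illustrated with a textbook Elo update in which the anchor's rating is simply never written back. This is a generic sketch, not the rumble server's or CGOS's actual code; the K-factor of 16, the 400-point logistic scale, and all names are assumptions for the example.

```java
final class AnchoredElo {
    // Assumed K-factor; real systems tune this value.
    static final double K = 16.0;

    /** Standard Elo expected score of a player rated ratingA against ratingB. */
    static double expectedScore(double ratingA, double ratingB) {
        return 1.0 / (1.0 + Math.pow(10.0, (ratingB - ratingA) / 400.0));
    }

    /**
     * Applies one result between competitors a and b, updating ratings in place.
     * scoreA is a's score as a fraction in [0, 1]. The competitor at index
     * anchor keeps its rating no matter what the result is.
     */
    static void update(double[] ratings, int a, int b, double scoreA, int anchor) {
        double delta = K * (scoreA - expectedScore(ratings[a], ratings[b]));
        if (a != anchor) {
            ratings[a] += delta;
        }
        if (b != anchor) {
            ratings[b] -= delta;
        }
    }
}
```

Note that when the actual score equals the expected score, delta is zero and nobody's rating moves, which is the "result relative to the expected result" behaviour described earlier in the thread.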

But simply centering everyone around 1600 (ELO) or 50% (APS) is good enough for me.

I think that's a good idea, and is something David Alves had slated for roborumble.org =), but everyone else's ratings would still change as the population changes, just as APS does. I was just saying that is unavoidable, and not an anomalous side-effect of some weird scoring formula, like ELO rating drift.

Rating drift due to population increase is hard to work around, and the anchor competitor is the only way I know.

But rating drift due to re-submits (everyone's rating going down) can be avoided by centering everyone around a constant number, like 1600, or around a single anchor competitor.

If I had to pick an anchor, I would go with something like SandboxDT, RaikoMX or some other classic robot.