Weird rumble scores

I've noticed that BeepBoop has been dropping in APS in the rumble lately. 0.14 should be a bit stronger than 0.13 and gets around 91.5 APS when I run a season against all rumble participants myself, but in the rumble it's at 91.2 APS and is quite a bit worse than 0.13. Does anyone have ideas about what could be causing the difference? The drop seems consistent rather than against a few problem bots, so the only thing I can think of is that it's skipping turns on some rumble clients but not when I run it myself.

I’m experiencing a similar thing. The score keeps dropping as more battles come in.

But it’s not hard to explain.

1. For bots you score 100% against, each additional battle either keeps the score at 100% or lowers it, and the more battles there are, the higher the probability that it falls below 100%, so the score can only decrease as more battles come in.

2. Bots that save data only get better the more data they have.

Since this affects a lot of bots, a shift of 0.1~0.2 APS is quite common.

Worth mentioning that a 1 APS decrease against 10 bots is enough to give you -0.1 APS.

Btw, 1 season is generally not enough to get stable results. For reliable results, 50000 or even 100000 battles are needed. Anyway, comparing after ~30000 battles is generally acceptable.

2 would explain a bit of a score decrease, although not that many bots save data. I don't think 1 is true; sure some 100%s will become 99.5%s but also some 99%s will move up to 99.5%. Without 2, the APS estimated from a small number of battles should be an unbiased estimate of the true APS. And it takes 1 APS decrease in 100 bots to get -0.1 APS since there are ~1000 bots in the rumble!
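
To make the arithmetic in this exchange concrete, here is a minimal sketch. It assumes overall APS is the unweighted mean of the per-opponent scores and uses the ~1000 bots figure quoted above; the class and method names are hypothetical.

```java
// Sketch only: assumes APS is the plain average of per-pairing percentage scores.
final class ApsShift {
    /** Change in overall APS when `affected` of `total` pairings each move by `delta` points. */
    static double overallShift(int affected, int total, double delta) {
        return affected * delta / total;
    }

    public static void main(String[] args) {
        int total = 1000; // approximate rumble size mentioned in the thread
        // A 1-point drop against 10 bots versus against 100 bots.
        System.out.println(overallShift(10, total, -1.0));
        System.out.println(overallShift(100, total, -1.0));
    }
}
```

Under that assumption, a 1-point drop against 10 bots moves the total by about -0.01, and it takes roughly 100 affected pairings to reach -0.1.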

Have a look at 0.14 and 0.14a: their APS differs by 0.3. And looking only at the simplest bots to beat, 0.14a has more 100% scores than 0.14, simply because it hasn’t had enough battles yet.

99% going to 99.5% does happen, but such scores move to 98.5% as well. It’s mostly balanced.

But the 100% part is one-sided, causing the score to keep moving down until it reaches some “stable point”.

A 0-1 range is balanced, but since you never get 0, it becomes unbalanced. Averaging over an unbalanced distribution is sometimes biased. There are better ways to calculate APS that avoid score shifting, e.g. using the Wilson score lower bound for each bot instead of a moving average over a window of some 40 battles.
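
For readers unfamiliar with it, the Wilson score lower bound mentioned here could be computed roughly as below. This is a sketch, not anything the rumble server does: it treats a pairing's average score fraction as a binomial proportion over `n` battles, which is only an approximation for Robocode scores, and the class name and the choice of `z` are assumptions.

```java
// Sketch only: Wilson score interval lower bound for a proportion p observed over n trials.
final class WilsonBound {
    /**
     * @param p observed proportion in [0, 1], e.g. 0.995 for a 99.5% pairing score
     * @param n number of battles behind that score
     * @param z normal quantile, e.g. 1.96 for a two-sided 95% interval
     */
    static double lowerBound(double p, int n, double z) {
        if (n <= 0) {
            return 0.0; // no data: the bound carries no information
        }
        double z2 = z * z;
        double centre = p + z2 / (2.0 * n);
        double margin = z * Math.sqrt(p * (1.0 - p) / n + z2 / (4.0 * n * n));
        return (centre - margin) / (1.0 + z2 / n);
    }
}
```

With this bound, a 100% score from two battles ranks well below a 100% score from forty, so a pairing cannot look perfect merely because it has few battles.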

I have noticed some weird outlier scores myself, and I don't think it's just those factors, Xor.

The two battles of ags.Glacier 0.3.0 versus lxx.Emerald 0.6.5 that ran had an average APS of 26.04 for Glacier, yet when I ran those two bots manually on the same computer I had a rumble client on, I couldn't reproduce anything remotely close to a score that low for Glacier. It was consistently >60 no matter how many times I ran it, usually >70. That's a massive discrepancy, even with so few data points.

At the time, the only rumble client running, besides my own on the same computer where I couldn't reproduce the issue, was "Xor_Sily". There's a chance it was just getting massively unlucky, but I'm rather curious what the result of manually running some ags.Glacier 0.3.0 versus lxx.Emerald 0.6.5 battles on the Xor_Sily computer would be.

If you are recording data, I can send you the files.

Anyway, I’ve long suffered from outlier results, going back to the days when I was optimizing SimpleBot. The score drop after more battles is sometimes huge (Beaming was running the rumble as well in those days), and yes, it is not reproducible.

And that’s why the Scalar series is called “scalar”: I’ve been optimizing performance exclusively since then, mainly leveraging Scalar Replacement to reduce GC overhead. Nowadays I suffer from outlier scores much less.

So yes, sometimes there are outlier scores. The cause is either some bug or too much GC overhead (resulting in a lot of skipped turns).

I'm not recording any data at this time, no.

GC overhead is one potential issue, but I would argue that if rumble clients are causing massive skipped turns due to GC overhead, then the rumble client is likely badly configured, with something like too many rumble clients running concurrently for the number of CPU cores.

Java runs GC taking advantage of an additional thread generally, and it's normal for GC overhead to be significant for many bots, to the degree where I would argue that a rumble client should always be allocated two unused CPU cores, one for the main thread and one for GC thread overhead.

Since rumble clients upload scores after every few battles, it’s really unlikely that every core is busy all the time. Not to mention that modern CPUs are great at reordering instructions, making room for more threads. So leaving 1 more core idle is a complete waste of time & money IMO.

Anyway, if rumble clients commonly experience GC overhead, it could be solved by forcing a full GC before each round. And if you’re still producing too much garbage even between rounds, it’s totally fine to be punished with skipped turns.

Anyway, GC overhead is always fair. If you deal with GC and skipped turns worse than your opponents, you get a worse score; it’s judged perfectly.

Being fair means no one is getting advantage over it. And the ability to withstand occasional skipped turns is part of the competition.

Since no one can guarantee that robots always run with sufficient resources, I’m always on the side that robot authors should assume low-performance computers.

"no one is getting advantage over it" is certainly untrue. Consider a bot that uses very little CPU in the main thread but creates lots of GC overhead, in a battle versus a bot that uses most of the typical CPU allotment but doesn't create much GC overhead. If this is on a system where the GC thread can affect the time available to the main thread (i.e. the system doesn't have enough spare CPU for the GC thread), then the bot that uses very little CPU in the main thread but creates lots of GC overhead will be advantaged by causing that overhead, since it would cause more skipped turns for the other bot but not so many for itself. This is a somewhat extreme example, but the point is that skipped turns caused by GC overhead are anything but fairly distributed.

You are right. Apart from creating many threads that do a lot of work when it’s others’ turn, creating a lot of objects to increase GC overhead does affect others’ bots as well, making the result a little bit random.

But I doubt GC overhead makes much of a difference. The most unreproducible scores I’ve experienced always come from some rare exception, say 1 in 1000. Once it happens, it causes some random pairing to score close to 0. Averaged with a normal score, it really looks like the score is decreasing for no reason.

But there’s always some reason, and it mostly comes from the specific bot rather than the clients, since not everyone is affected.

So my advice is to output exceptions to a file and check whether there are any. Skipped turns could also be counted. I did this in older bots as well, and concluded that GC overhead & skipped turns aren’t really the problem, but exceptions are.
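
The advice above could look something like the following. This is a minimal sketch written against plain Java so it runs on its own; `BotDiagnostics` and its methods are hypothetical names, not part of Robocode. In a real bot, `recordSkippedTurn()` would presumably be called from the robot's `onSkippedTurn` handler, and the `Writer` would wrap a `RobocodeFileOutputStream` opened on `getDataFile(...)`, since bots are expected to write files through that mechanism.

```java
import java.io.IOException;
import java.io.PrintWriter;
import java.io.StringWriter;
import java.io.Writer;

// Sketch only: counts skipped turns and logs uncaught exceptions from the turn logic.
final class BotDiagnostics {
    private final Writer out;
    private int skippedTurns;
    private int exceptions;

    BotDiagnostics(Writer out) {
        this.out = out;
    }

    /** Call once per skipped-turn event. */
    void recordSkippedTurn() {
        skippedTurns++;
    }

    /** Runs one turn of bot logic; logs any runtime exception instead of losing it. */
    void runGuarded(long turn, Runnable turnLogic) {
        try {
            turnLogic.run();
        } catch (RuntimeException e) {
            exceptions++;
            StringWriter trace = new StringWriter();
            e.printStackTrace(new PrintWriter(trace, true));
            write("turn " + turn + ": " + trace);
        }
    }

    /** Writes a one-line summary, e.g. at the end of each round. */
    void writeSummary(int round) {
        write("round " + round + ": skipped=" + skippedTurns
                + " exceptions=" + exceptions + "\n");
    }

    int skippedTurns() {
        return skippedTurns;
    }

    int exceptions() {
        return exceptions;
    }

    private void write(String text) {
        try {
            out.write(text);
            out.flush();
        } catch (IOException ignored) {
            // Logging must never break the bot itself.
        }
    }
}
```

Comparing the per-round summaries from a local run with those from a rumble client would show whether a low-scoring battle coincided with exceptions, with skipped turns, or with neither.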

For the specific case I mentioned of ags.Glacier 0.3.0 versus lxx.Emerald 0.6.5, when it was at 1 battle, I thought it was likely some rare exception as you say, but then a 2nd battle came in with about the same low score as the 1st battle, and yet I'm not able to reproduce a result anything like that in many many tests. This leads me to believe that there is most likely something significantly different about the environment those two battles were run in, as compared to my own environment.

I think you are right. Clients should be allocated one extra core so that GC can run in the background. Forcing a full GC each round could also be added to the rumble client.

And I think the extra core should be enforced by rumble clients.

Anyway, the optimal CPU ratio needs some experiments, but battles seem to run even faster when some cores are dedicated to GC.

And I’m not sure whether rumble clients should be restarted after many battles. If there were memory leaks in Robocode, performance would drop over time, resulting in an APS drop for newer bots.

It's not per-round so much as per-battle, but I will note that Robocode does make some System.gc calls at end of battle.

It's also the case that for a very long time I've been in the habit of including System.gc() in the constructor of my bots, so that'd end up being per round.

That can only be Xor_Sily; it is the only client that has run battles since you uploaded version 0.15.

Did you manually set the CPU constant in your Robocode install? Maybe Xor has to recalculate the CPU constant for Xor_Sily, if the machine it is running on has become more heavily loaded.

The CPU constant of Xor_Sily was computed when all cores were in use, and currently I’m using only half of the cores as suggested by Rednaxela. So the CPU constant may be a little loose, making skipped turns happen LESS. If a bot isn’t skipping turns in an ordinary configuration, it shouldn’t skip turns on Xor_Sily.

If anyone is logging data, I could help by sending the files.

Isn't using half of the cores also half the performance, so taking twice the time, so ideally twice the CPU constant?

I know the difference now: Xor_Sily has no turbo boost support, so its CPU constant is accurate. On most computers the CPU constant is actually much looser, because actual battles run with turbo boost but the CPU constant is computed without it. This makes skipped turns happen LESS.

I'm not sure I follow: what you are saying suggests that skipped turns should happen more on Xor_Sily right? I assume the reason for BeepBoop's low scores is it skipping lots of turns.

As an aside, I've noticed that DrussGT 3.1.7 has also dropped 0.3 APS compared to 3.1.6; maybe it is also getting bad battles with lots of skipped turns?

I mean if you run battles on computers without turbo boost, you should get the same results as Xor_Sily.

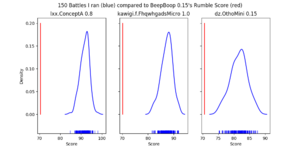

Hmmm I'm still not able to reproduce the low-scoring battles. First of all, turning off turbo boost does change the CPU constant for me (it's ~4e6 with turbo boost on and ~5e6 with turbo boost off, I've recomputed the constant both with/without multiple times and it seems reasonably consistent). But even if I use the 4e6 CPU constant with turbo boost turned off, I am getting essentially the same results as in the image I uploaded. What CPU constant does Xor_Sily use? Maybe as a short-term fix you could add BeepBoop to its roborumble.txt EXCLUDE?

I stopped Xor_Sily. It's some high performance server that costs $40 a week. Maybe we need some test set to verify rumble clients before entry, making it easier to serve a client.

Btw, could you re-submit a version and run rumbles on your machine, and see if we can get the correct result now?

I think the problem is that Xor_Sily has been running 24/7 for months. If there is a memory leak, GC will get worse and worse. Maybe I should add some auto-restart script later and try again.

You can set the max iterations in the client and run it in a bash loop.

What sort of high performance server is this? If it's virtualized rather than true dedicated, it wouldn't surprise me if the exact amount of available CPU varies dramatically from moment-to-moment even if the provider is guaranteeing some number of cores worth of overall performance.

It could be interesting to make a tool at some point for measuring the stability of available CPU, based on rapidly running a series of identical micro-benchmarks and looking at the variation in how long they take.
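
A rough sketch of what such a tool might look like follows. Everything in it is an assumption for illustration: the class name `CpuJitter`, the workload, the run counts, and the use of the coefficient of variation as the stability measure.

```java
import java.util.Arrays;

// Sketch only: times many identical micro-benchmarks and reports how much the timings vary.
final class CpuJitter {
    // Written on every run so the JIT cannot discard the workload as dead code.
    static volatile long sink;

    /** A fixed amount of integer arithmetic; identical on every call. */
    static long workload(int iterations) {
        long x = 1;
        for (int i = 0; i < iterations; i++) {
            x = x * 6364136223846793005L + 1442695040888963407L;
        }
        return x;
    }

    /** Runs the workload `runs` times and returns each elapsed time in nanoseconds. */
    static long[] sample(int runs, int iterations) {
        long[] nanos = new long[runs];
        for (int r = 0; r < runs; r++) {
            long start = System.nanoTime();
            sink = workload(iterations);
            nanos[r] = System.nanoTime() - start;
        }
        return nanos;
    }

    /** Population standard deviation divided by the mean; 0 means perfectly stable. */
    static double coefficientOfVariation(long[] nanos) {
        double mean = Arrays.stream(nanos).average().orElse(0.0);
        if (mean == 0.0) {
            return 0.0;
        }
        double sumSq = 0.0;
        for (long v : nanos) {
            double d = v - mean;
            sumSq += d * d;
        }
        return Math.sqrt(sumSq / nanos.length) / mean;
    }

    public static void main(String[] args) {
        sample(200, 200_000); // warm-up so the workload is JIT-compiled before measuring
        long[] nanos = sample(1000, 200_000);
        long[] sorted = nanos.clone();
        Arrays.sort(sorted);
        System.out.printf("median=%d ns, max=%d ns, cv=%.3f%n",
                sorted[sorted.length / 2], sorted[sorted.length - 1],
                coefficientOfVariation(nanos));
    }
}
```

On a machine with steady CPU availability, the maximum should stay fairly close to the median; large, irregular spikes would hint at the kind of moment-to-moment variation described for virtualized servers.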

Looks like the weird battles are produced on Xor_Sily. I will run some battles using these bots manually on Xor_Sily, to see what's happening.

Btw, I'm not experiencing weird results personally; battles run locally have results similar to those in the rumble. How many cores are you using when running local battles?

Stopping Xor_Sily seems to have fixed things, with BeepBoop's scores finally matching what happens when I run a season myself! I also did some profiling to make sure BeepBoop isn't a SlowBot. On average it takes <80% of the time per tick of DrussGT and <50% of Diamond. For the 99.9th percentile of slowest ticks it is slightly (<10%) slower than DrussGT and slightly faster than Diamond, so I don't think it should be skipping turns any more often than them.
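
For anyone wanting to run a similar comparison, per-tick timings can be summarized along these lines. This is a sketch and not the profiling code actually used here: the class name is hypothetical, the timings are assumed to have been collected already (for example with `System.nanoTime()` around each turn), and the percentile uses the simple nearest-rank definition.

```java
import java.util.Arrays;

// Sketch only: mean and nearest-rank percentile of per-tick execution times.
final class TickStats {
    static double mean(long[] nanos) {
        return Arrays.stream(nanos).average().orElse(0.0);
    }

    /** Nearest-rank percentile; pct is in (0, 100], samples must be non-empty. */
    static long percentile(long[] nanos, double pct) {
        long[] sorted = nanos.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(pct / 100.0 * sorted.length);
        rank = Math.max(1, Math.min(rank, sorted.length));
        return sorted[rank - 1];
    }
}
```

Calling `TickStats.percentile(times, 99.9)` for each bot gives the slow-tick figure, which matters more for skipped turns than the mean does, since a turn is skipped when a single tick runs over its time limit.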