Running them all at the same time could make sense, but I would suggest being careful if you do that, because the order in which they run may matter. Even when running them one at a time in sequence, I recall noticing that the order could very slightly affect the apparent performance, I suspect due to caching, JIT, and/or garbage collection characteristics. It's been a while, but IIRC the System.gc() call I have in there between running different trees was to lessen that effect. It may make sense to add some form of randomization to the sequence they're run in.
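As a rough illustration of the randomization idea, here is a minimal sketch of a harness that reshuffles the run order every round and requests a GC before each timed run. The class name `ShuffledBenchmark`, the `run` signature, and the placeholder workloads are all made up for this example; none of this is the actual benchmark code from KNN.jar.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical harness, not the code from KNN.jar.
class ShuffledBenchmark {
    private static double sink; // keeps the placeholder workload from being optimized away

    /** Runs every task once per round, in a freshly shuffled order, and returns total nanoseconds per task. */
    static Map<String, Long> run(Map<String, Runnable> tasks, int rounds, long seed) {
        Random rng = new Random(seed);
        Map<String, Long> totals = new LinkedHashMap<>();
        for (String name : tasks.keySet()) {
            totals.put(name, 0L);
        }
        List<String> order = new ArrayList<>(tasks.keySet());
        for (int r = 0; r < rounds; r++) {
            Collections.shuffle(order, rng); // a different sequence each round
            for (String name : order) {
                System.gc(); // only a hint to the JVM; meant to reduce carry-over between runs
                long start = System.nanoTime();
                tasks.get(name).run();
                totals.merge(name, System.nanoTime() - start, Long::sum);
            }
        }
        return totals;
    }

    // Placeholder workload standing in for "build a tree and run the searches".
    private static void busyWork(int n) {
        double s = 0;
        for (int i = 0; i < n; i++) {
            s += Math.sqrt(i);
        }
        sink = s;
    }

    public static void main(String[] args) {
        Map<String, Runnable> tasks = new LinkedHashMap<>();
        tasks.put("treeA", () -> busyWork(200_000));
        tasks.put("treeB", () -> busyWork(300_000));
        int rounds = 5;
        run(tasks, rounds, 42L).forEach(
            (name, ns) -> System.out.println(name + ": " + ns / rounds + " ns avg"));
    }
}
```

Averaging over shuffled rounds doesn't remove the ordering effect, it just spreads it across all the trees instead of always penalizing the same one.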

Cache performance is one of those things that's tricky with Robocode, because your robot is also sharing the CPU with another bot which could be doing who knows what with its memory accesses. For that reason I wouldn't trust optimizations for better caching behavior to necessarily pan out in practice with bots. I may be wrong about that though.

One could put it in a reference bot, yeah, though the tests would be far slower and less consistent, so it would take many more test iterations to get a reliable result.

Oh, and in any case, nice job with making a tree that much faster with the large datasets :)

I wrote a quick benchmark and threw it in my main method. Some tweaks and improvements (putting the distance functions in methods, using a binary search for the results array) have given me big improvements at lower tree sizes:
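For anyone wondering what the binary search on the results array might look like, here is a minimal sketch assuming the results are kept as a fixed-size array sorted by ascending distance. The class `SortedResults` and its methods are invented for this illustration and are not taken from either tree's source.

```java
import java.util.Arrays;

// Hypothetical k-best result list; not the actual code from either tree.
class SortedResults {
    private final double[] dists; // ascending distances of the current best candidates
    private final int[] ids;      // point ids, parallel to dists
    private int size;

    SortedResults(int k) {
        if (k <= 0) {
            throw new IllegalArgumentException("k must be positive");
        }
        dists = new double[k];
        ids = new int[k];
    }

    /** Offers a candidate; returns true if it made it into the k best. */
    boolean offer(int id, double dist) {
        if (size == dists.length && dist >= dists[size - 1]) {
            return false; // full, and no better than the current worst
        }
        int pos = Arrays.binarySearch(dists, 0, size, dist);
        if (pos < 0) {
            pos = -pos - 1; // decode the insertion point
        }
        // Shift the tail right by one; when full, the old worst entry falls off the end.
        int last = Math.min(size, dists.length - 1);
        System.arraycopy(dists, pos, dists, pos + 1, last - pos);
        System.arraycopy(ids, pos, ids, pos + 1, last - pos);
        dists[pos] = dist;
        ids[pos] = id;
        if (size < dists.length) {
            size++;
        }
        return true;
    }

    int size() { return size; }
    int idAt(int i) { return ids[i]; }
    double distAt(int i) { return dists[i]; }

    public static void main(String[] args) {
        SortedResults best = new SortedResults(3);
        double[] candidates = {5.0, 3.0, 4.0, 1.0, 4.5};
        for (int i = 0; i < candidates.length; i++) {
            best.offer(i, candidates[i]);
        }
        for (int i = 0; i < best.size(); i++) {
            System.out.println(best.idAt(i) + " at distance " + best.distAt(i));
        }
    }
}
```

The shift is still linear in k, so the binary search only saves comparisons, but the early reject against the current worst distance means most candidates never get that far.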

Config: No JIT Warmup. Tested on random data. Training and testing points shared across iterations. Searches interleaved.

- Num points: 20000
- Num searches: 200
- Dimensions: 12
- Num Neighbours: 40

| Iteration | Accuracy | This tree add avg (ns) | Reds tree add avg (ns) | This tree knn avg (ns) | Reds tree knn avg (ns) | This tree knn max (ns) | Reds tree knn max (ns) |
|---|---|---|---|---|---|---|---|
| 1/3 | 100% | 3092 | 2216 | 809965 | 1380366 | 10844097 | 11005183 |
| 2/3 | 100% | 1259 | 846 | 643037 | 1119268 | 979013 | 1787566 |
| 3/3 | 100% | 1146 | 800 | 641163 | 1099657 | 1318587 | 1782212 |

Note, I hacked the RedGen2 tree to not check for NaN in the distance functions so that they are on equal footing.

Ahh nice. Out of curiosity, any reason you're comparing against my 2nd gen tree? My 3rd gen tree was a bit faster at least in the tests I did.

I don't have it installed yet, and I thought I'd do a proper bench when I add my tree to the whole bench framework. Do you still have the code that does those nice charts tracking the trees through time? Is that what's in the KNN.jar? Yes it is! Great.

Ah, looks like the KNN.jar uploaded on the wiki isn't quite 100% up-to-date. I made a few minor changes a couple of days after it was last updated. See here for the latest source [1].

You got that just in time, your latest code is now in!

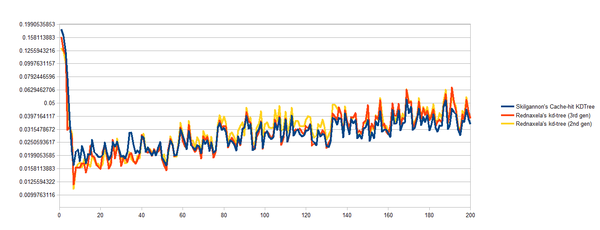

So on the vanilla Diamond-gun data benchmark it seems to be pretty much tied with your Gen2 and Gen3, changing places from run to run. This is what I mostly saw, though: your Gen2 slightly ahead of Gen3, with my cache-hit tree in the middle:

BEST RESULT:

- #1 Rednaxela's kd-tree (2nd gen) [0.0317]
- #2 Skilgannon's Cache-hit KDTree [0.0340]
- #3 Rednaxela's kd-tree (3rd gen) [0.0356]
- #4 Voidious' Linear search [0.4175]

So, I wrote some code which instantiates, does operations on, then destroys an array of 1k doubles and 1k ints, and makes it a dependency of a later println. This was the result:
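The code itself isn't posted in the thread, so the following is only a guess at its general shape: allocate the two arrays, do some arithmetic on them, let them become garbage, and return a value that later gets printed so the JIT can't discard the work. The name `CacheDisturber`, the particular arithmetic, and where it is called from are all assumptions.

```java
// Hypothetical reconstruction; the original code is not shown in the thread.
class CacheDisturber {
    /** Allocates n doubles and n ints, touches every element, and returns a checksum. */
    static double disturb(int n) {
        double[] ds = new double[n];
        int[] is = new int[n];
        for (int i = 0; i < n; i++) {
            ds[i] = i * 0.5;
            is[i] = i;
        }
        double sum = 0;
        for (int i = 0; i < n; i++) {
            sum += ds[i] * is[i];
        }
        return sum; // both arrays become unreachable once this returns
    }

    public static void main(String[] args) {
        double checksum = 0;
        for (int search = 0; search < 200; search++) {
            // ... a timed search would go here ...
            checksum += disturb(1000); // untimed memory traffic between searches
        }
        // Printing the checksum makes the array work a dependency of visible output.
        System.out.println("checksum: " + checksum);
    }
}
```

Keeping the call outside the timed region matters, otherwise the allocation cost would be charged to whichever tree happens to be under test.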

RESULT:

| k-nearest neighbours search with | Average searching time (ms) | Average worst searching time (ms) | Average adding time (µs) | Accuracy |
|---|---|---|---|---|
| Voidious' Linear search | 0.4198 | 14.7468 | 1.6292 | 100.0% |
| Rednaxela's kd-tree (2nd gen) | 0.0333 | 1.7135 | 1.7662 | 100.0% |
| Skilgannon's Cache-hit KDTree | 0.0312 | 1.3695 | 2.7642 | 100.0% |
| Rednaxela's kd-tree (3rd gen) | 0.0317 | 1.2998 | 2.1558 | 100.0% |

BEST RESULT:

- #1 Skilgannon's Cache-hit KDTree [0.0312]
- #2 Rednaxela's kd-tree (3rd gen) [0.0317]
- #3 Rednaxela's kd-tree (2nd gen) [0.0333]
- #4 Voidious' Linear search [0.4198]

It now comes first every single time I run. So it seems my cache-hit design has paid off (slightly) =)