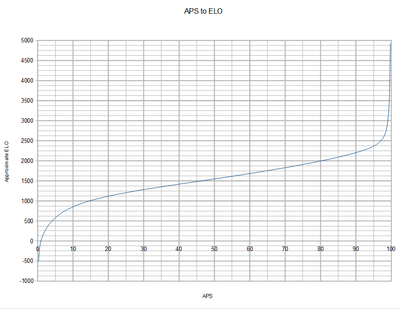

Ah, good idea. How about this? It is a real mess at the moment, but it seems to work, and in my opinion it places certain bots more accurately where they belong. It also goes to negative infinity at 0 and positive infinity at 100.

    public static final double calculateApproxElo(double x) {
        double a = 0.0007082;
        double b = -0.00000334;
        double c = 0.003992;
        double d = -124.3;
        double e = 647.4;
        double f = -72.5;
        return 1.0/(a+b*x+c/x) + d/x + e/(100-x) + f;
    }
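To make the behaviour at the ends easier to check, here is a small sketch that wraps the same formula and prints it for a few inputs. It assumes `x` is an APS percentage in the range 0 to 100; the class name `ApproxEloDemo` and the sample inputs are only for illustration.

```java
final class ApproxEloDemo {
    // Same hand-tuned formula and constants as in the post above.
    static double calculateApproxElo(double x) {
        double a = 0.0007082;
        double b = -0.00000334;
        double c = 0.003992;
        double d = -124.3;
        double e = 647.4;
        double f = -72.5;
        return 1.0 / (a + b * x + c / x) + d / x + e / (100 - x) + f;
    }

    public static void main(String[] args) {
        // Illustrative inputs only (assumed to be APS percentages).
        for (double aps : new double[] {0, 25, 50, 75, 100}) {
            System.out.println(aps + " -> " + calculateApproxElo(aps));
        }
    }
}
```

With Java's IEEE 754 doubles, the `d/x` term drives the result to negative infinity at 0 and the `e/(100-x)` term drives it to positive infinity at 100, so no special-casing is needed for the two end points.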

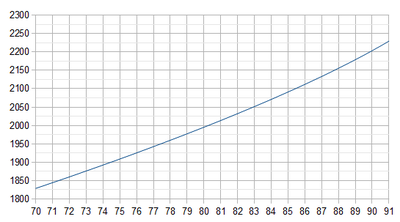

That is, Diamond and DrussGT are in the 2200s (only just), Phoenix and up are in the 2100s, and Help and up are in the 2000s. The 1900s start around Raiko/Lacrimas.

If you want to enforce a specific rating for a specific competitor, you can also adjust the DESIRED_AVERAGE in the algorithm above so ratings match what you want.

If you change the rating difference between competitors, you distort the expected score estimation, PBI, and specialization indexes. The rating is no longer Elo-like.

That's why the code I put above shifts all competitors up or down equally.
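As a rough illustration of that uniform shift (not the original code, which is not reproduced in this part of the thread), the sketch below adds one common offset to every rating so the mean lands on a target. The value of `DESIRED_AVERAGE` and the helper name `shiftToAverage` are assumptions made for the example.

```java
import java.util.Arrays;

final class RatingShiftSketch {
    // Hypothetical target; the real DESIRED_AVERAGE value is not given here.
    static final double DESIRED_AVERAGE = 1600.0;

    /** Returns a copy of ratings with one common offset added so the mean equals desiredAverage. */
    static double[] shiftToAverage(double[] ratings, double desiredAverage) {
        // An empty input has no mean; fall back to a zero offset in that case.
        double mean = Arrays.stream(ratings).average().orElse(desiredAverage);
        double offset = desiredAverage - mean;
        return Arrays.stream(ratings).map(r -> r + offset).toArray();
    }
}
```

Because every competitor receives the same offset, the difference between any two ratings is unchanged, which is what keeps the expected score estimates intact.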

Looks pretty good. Any chance you could show that first graph overlaid with a scatter plot of the training samples you used?

The first one was generated (calcelofast). I used all samples from the 2009 rumble archive, APS and Glicko-2.

From there it was hand tuned to be honest.

Wow, ok. It would only be a couple lines of Matlab/Scilab to do a proper regression though. Wouldn't that be better?
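A regression does not have to happen in Matlab/Scilab. As a partial sketch in Java: the posted formula is linear in `d`, `e` and `f`, so those three can be fitted by ordinary least squares while `a`, `b` and `c` stay at their hand-tuned values. Fitting `a`, `b` and `c` as well would need a nonlinear method, which is not shown. The class and method names are made up for this example, and the (APS, rating) samples have to be supplied by the caller; none are included here.

```java
final class EloFitSketch {
    // Hand-tuned constants from the thread; held fixed in this sketch.
    static final double A = 0.0007082, B = -0.00000334, C = 0.003992;

    static double nonlinearPart(double aps) {
        return 1.0 / (A + B * aps + C / aps);
    }

    /**
     * Least-squares fit of {d, e, f} in
     *   elo = nonlinearPart(aps) + d/aps + e/(100-aps) + f
     * Samples must have 0 < aps < 100, and at least three distinct aps values.
     */
    static double[] fitLinearTerms(double[] aps, double[] elo) {
        double[][] m = new double[3][4]; // augmented normal equations
        for (int i = 0; i < aps.length; i++) {
            double[] basis = {1.0 / aps[i], 1.0 / (100.0 - aps[i]), 1.0};
            double target = elo[i] - nonlinearPart(aps[i]);
            for (int r = 0; r < 3; r++) {
                for (int c = 0; c < 3; c++) m[r][c] += basis[r] * basis[c];
                m[r][3] += basis[r] * target;
            }
        }
        // Gaussian elimination with partial pivoting.
        for (int col = 0; col < 3; col++) {
            int pivot = col;
            for (int r = col + 1; r < 3; r++) {
                if (Math.abs(m[r][col]) > Math.abs(m[pivot][col])) pivot = r;
            }
            double[] tmp = m[col]; m[col] = m[pivot]; m[pivot] = tmp;
            for (int r = col + 1; r < 3; r++) {
                double factor = m[r][col] / m[col][col];
                for (int c = col; c < 4; c++) m[r][c] -= factor * m[col][c];
            }
        }
        // Back substitution.
        double[] x = new double[3];
        for (int r = 2; r >= 0; r--) {
            double sum = m[r][3];
            for (int c = r + 1; c < 3; c++) sum -= m[r][c] * x[c];
            x[r] = sum / m[r][r];
        }
        return x; // {d, e, f}
    }
}
```

Calling `fitLinearTerms` with the archive's APS values and their Glicko-2 ratings would return candidate values for `d`, `e` and `f` that could be compared against the hand-tuned ones.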