View source for Talk:BeepBoop


Contents

| Thread title | Replies | Last modified |
|---|---|---|
| Weird rumble scores | 39 | 20:36, 16 July 2021 |
| Congratulations on 100% PWin! | 12 | 03:25, 24 June 2021 |
| Energy Management & Firepower Selection | 2 | 22:49, 23 June 2021 |
| Awesome enty | 23 | 17:57, 23 June 2021 |

I've noticed that BeepBoop has been dropping in APS in the rumble lately. 0.14 should be a bit stronger than 0.13 and gets around 91.5 APS when I run a season against all rumble participants myself, but in the rumble it's at 91.2 APS and is quite a bit worse than 0.13. Does anyone have ideas about what could be causing the difference? The drop seems consistent rather than against a few problem bots, so the only thing I can think of is that it's skipping turns on some rumble clients but not when I run it myself.

I'm experiencing a similar thing. My score keeps dropping as more battles come in.

But it's not hard to explain.

1. Against bots where you currently score 100%, more battles can only keep the score at 100% or pull it below 100%, and the more battles there are, the more likely it ends up below 100%. So those scores can only decrease as more battles come in.

2. Bots that save data only get better the more data they have.

Since this affects a lot of bots, a shift of 0.1~0.2 APS is quite common.

It's worth mentioning that a 1 APS decrease against 10 bots is enough to give you -0.1 APS.

Btw, 1 season is generally not enough to get stable results. For reliable results, 50000 or even 100000 battles are needed. That said, comparing after ~30000 battles is generally acceptable.

2 would explain a bit of a score decrease, although not that many bots save data. I don't think 1 is true; sure some 100%s will become 99.5%s but also some 99%s will move up to 99.5%. Without 2, the APS estimated from a small number of battles should be an unbiased estimate of the true APS. And it takes 1 APS decrease in 100 bots to get -0.1 APS since there are ~1000 bots in the rumble!
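The arithmetic behind that last point can be sketched as follows. This is only a back-of-the-envelope helper, assuming overall APS is the plain average of the per-opponent scores; the class and method names are made up for illustration.

```java
/** Back-of-the-envelope: how a per-opponent score change moves overall APS. */
final class ApsShift {
    /**
     * Assumes overall APS is the plain average of the per-opponent scores,
     * so a change against a subset of opponents is diluted by the pool size.
     *
     * @param perBotChange change in score against each affected opponent (in APS points)
     * @param affectedBots number of opponents whose score changed
     * @param totalBots    number of opponents in the rumble
     */
    static double overallShift(double perBotChange, int affectedBots, int totalBots) {
        if (totalBots <= 0 || affectedBots < 0 || affectedBots > totalBots) {
            throw new IllegalArgumentException("need 0 <= affectedBots <= totalBots and totalBots > 0");
        }
        return perBotChange * affectedBots / totalBots;
    }
}
```

With ~1000 bots, `ApsShift.overallShift(-1.0, 100, 1000)` gives about -0.1, while the same drop against only 10 bots gives about -0.01.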

Have a look at 0.14 and 0.14a: their APS differs by 0.3. And looking only at the bots that are simplest to beat, 0.14a has more 100% scores than 0.14, simply because it hasn't had enough battles yet.

99% going to 99.5% does happen, but 99% also moves to 98.5%. It's mostly balanced.

But the 100% part is one-sided, causing the score to keep moving down until it reaches some "stable point".

0-1 is balanced, but since you don't get 0, it ends up unbalanced. Averaging over an unbalanced distribution is sometimes biased. There are better ways of calculating APS that avoid score shifting, e.g. using the Wilson score lower bound for each bot instead of a moving average over a window of about 40.
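For reference, the Wilson score lower bound could look roughly like this. It is a minimal sketch, not anything the rumble server does: the Wilson interval is defined for binomial proportions, so feeding it a percentage score as if each battle were a yes/no trial is an assumption, and the class name is made up.

```java
/** Lower end of the Wilson score interval, treating a score fraction as a proportion. */
final class WilsonBound {
    /**
     * @param pHat observed fraction in [0, 1], e.g. 0.995 for a 99.5% score
     * @param n    number of observations (battles), treated as Bernoulli-like trials
     * @param z    normal quantile, e.g. 1.96 for a roughly 95% two-sided interval
     */
    static double lowerBound(double pHat, int n, double z) {
        if (n <= 0) {
            return 0.0; // no data yet: fall back to the most pessimistic estimate
        }
        double z2 = z * z;
        double centre = pHat + z2 / (2.0 * n);
        double margin = z * Math.sqrt(pHat * (1.0 - pHat) / n + z2 / (4.0 * n * n));
        return (centre - margin) / (1.0 + z2 / n);
    }
}
```

For an observed 100% the bound reduces to n / (n + z^2), so it starts low and rises toward 1 as battles accumulate, rather than starting at 100% and only being able to fall.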

I have noticed some weird outlier scores myself, and I don't think it's just those factors, Xor.

The two battles of ags.Glacier 0.3.0 versus lxx.Emerald 0.6.5 that ran had an average APS of 26.04 for Glacier. Yet when I ran those two bots manually on the same computer I had a rumble client on, I couldn't reproduce anything remotely close to a score that low for Glacier: it was consistently >60 no matter how many times I ran it, usually >70. That's a massive discrepancy really, even with so few data points.

At the time, the only rumble client running, besides my own on the same computer where I couldn't reproduce the issue, was "Xor_Sily". There's a chance it was just getting massively unlucky, but I'm rather curious what the result of manually running some ags.Glacier 0.3.0 versus lxx.Emerald 0.6.5 battles on the Xor_Sily computer would be.

If you are recording data, I can send you the files.

Anyway, I've long suffered from outlier results, going back to the days when I was optimizing SimpleBot. Sometimes the score drop after more battles was huge (Beaming was running the rumble as well in those days), and yes, it was not reproducible.

And that's why the Scalar series is called "scalar": I've been optimizing performance exclusively since then, mainly leveraging Scalar Replacement to reduce GC overhead. Nowadays I suffer from outlier scores much less.

So yes, sometimes there are outlier scores. The cause is either some bug, or too much GC overhead (resulting in a lot of skipped turns).

I'm not recording any data at this time, no.

GC overhead is one potential issue, but I would argue that if rumble clients are causing massive skipped turns due to GC overhead, then the rumble client is likely badly configured, with something like too many rumble clients running concurrently for the number of CPU cores.

Java runs GC taking advantage of an additional thread generally, and it's normal for GC overhead to be significant for many bots, to the degree where I would argue that a rumble client should always be allocated two unused CPU cores, one for the main thread and one for GC thread overhead.

Since rumble clients upload scores after every few battles, it's really unlikely that every core is fully used all the time. Not to mention that modern CPUs are great at reordering instructions, making room for more threads. So leaving 1 more core idle is a complete waste of time & money IMO.

Anyway, if rumble clients are commonly experiencing GC overhead, it could be solved by forcing a full GC to run before each round. And if you're still producing too much garbage even between rounds, it's totally fine to be punished by skipped turns.

Anyway, GC overhead is always fair. If you deal with GC and skipped turns worse than your opponents, you get a worse score; that's a perfectly fair judgment.

I'm curious why you think GC overhead is always fair. Since GC overhead happens outside of the main thread, it can punish all robots in the same battle with a high degree of randomness. Not only that, if you're running a bunch of rumble clients on a computer, and the overall CPU usage on the system for all cores reaches 100% due to a couple clients having more GC overhead, then it could affect all bots in all active battles on that system, even in the other rumble clients.

I think you are right. Clients should be allocated one extra core so that GC can run in the background. Forcing a full GC each round could also be added to the rumble client.

And I think the extra core should be enforced by rumble clients.

Anyway, the optimal CPU ratio needs some experiments, but battles seem to run even faster when some cores are dedicated to GC.

And I'm not sure whether rumble clients should be restarted after many battles. If there are memory leaks in Robocode, performance will drop over time, resulting in an APS drop for newer bots.

It's not per-round so much as per-battle, but I will note that Robocode does make some System.gc calls at end of battle.

It's also the case that for a very long time I've been in the habit of including System.gc() in the constructor of my bots, so that'd end up being per round.

That can only be Xor_Sily; it is the only client that has run battles since you uploaded version 0.15.

Did you manually set the CPU constant in your Robocode install? Maybe Xor has to recalculate the CPU constant for Xor_Sily, if the machine it is running on has become more heavily loaded.

The CPU constant of Xor_Sily was computed while all cores were in use, and currently I'm using only half of the cores as suggested by Rednaxela. So maybe the CPU constant is a little loose, making skipped turns happen LESS. If a bot isn't skipping turns in an ordinary configuration, it shouldn't skip turns on Xor_Sily.

If anyone is logging data, I could help by sending the files.

Isn't using half of the cores also half the performance, so taking twice the time, so ideally twice the CPU constant?

I know the difference now: Xor_Sily has no turbo boost support, so its CPU constant is accurate. On most computers the CPU constant is actually much looser, because actual battles run with turbo boost but the CPU constant calculation does not. This makes skipped turns happen LESS on those machines.

I'm not sure I follow: what you are saying suggests that skipped turns should happen more on Xor_Sily right? I assume the reason for BeepBoop's low scores is it skipping lots of turns.

Looks like the weird battles are produced on Xor_Sily. I will run some battles using these bots manually on Xor_Sily, to see what's happening.

Btw, I'm not experiencing weird results personally; battles run locally have results similar to those in the rumble. How many cores are you using when running local battles?

Stopping Xor_Sily seems to have fixed things, with BeepBoop's scores finally matching what happens when I run a season myself! I also did some profiling to make sure BeepBoop isn't a SlowBot. On average it takes <80% of the time per tick of DrussGT and <50% of Diamond. For the 99.9th percentile of slowest ticks it is slightly (<10%) slower than DrussGT and slightly faster than Diamond, so I don't think it should be skipping turns any more often than them.
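A comparison like that needs a mean and a tail percentile over per-tick timings. The sketch below shows one way to compute them; it is not the actual profiling code. It assumes the timings were already collected elsewhere (for example with `System.nanoTime()` around each bot's turn logic), and `TickStats` is a made-up name.

```java
import java.util.Arrays;

/** Summary statistics over per-tick execution times, in nanoseconds. */
final class TickStats {
    /** Arithmetic mean of the samples. */
    static double mean(long[] nanos) {
        if (nanos.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        double sum = 0.0;
        for (long v : nanos) {
            sum += v;
        }
        return sum / nanos.length;
    }

    /**
     * Nearest-rank percentile, given in per mille so that 999 means the
     * 99.9th percentile: the smallest sample such that at least
     * perMille/1000 of all samples are less than or equal to it.
     */
    static long percentilePerMille(long[] nanos, int perMille) {
        if (nanos.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (perMille < 1 || perMille > 1000) {
            throw new IllegalArgumentException("perMille must be in 1..1000");
        }
        long[] sorted = nanos.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        // Integer ceiling of perMille * n / 1000 avoids floating-point rounding issues.
        long rank = ((long) perMille * sorted.length + 999) / 1000;
        return sorted[(int) rank - 1];
    }
}
```

Comparing `TickStats.percentilePerMille(ticks, 999)` between bots is more telling for skipped turns than the mean, since a turn is skipped by one slow tick, not by a slow average.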

I've long been wondering where the bar for top bots is, and now BeepBoop has proved that there's still much to do here! Congratulations on the surprising result! Maybe I could start a new bot as well soon!

For me it is not the 100% win; others have achieved that too over time. It is the very dominant win: only 2 bots score better than 40%. And the gigantic survival: only 1 bot survives 30%. I have experimented with bullet power, but never managed to get a real advantage from it. It seems, however, that the aggressive conservative way (what's in a name) of BeepBoop does pay off.

Yeah, after this one I will do an experimental release with more standard energy management to see how much BeepBoop's is making a difference.

My experience is that everything is dependent: score is some "multiplication" of everything. With top-class guns and top-class wave surfing, tuning energy management gives a lot of gain when it isn't done right, and so does tuning bandwidth, bullet shadow, etc. But with different guns and different wave surfing algorithms, the optimum for everything else is different, so past tuning gets outdated very soon.

Anyway, the most gain always comes from: 1. Fixing some significant weakness 2. Adding some big feature, e.g. Wave Surfing 3. Getting everything in 1 and 2 right.

Aye! Nicely done Kev! Seeing some of this sort of activity and similar kinda makes me feel tempted to come back to Robocode some time :)

Thanks everyone :). My theory is that "optimal" bots would be so good at dodging that there's nothing better than random targeting (taking into account walls, game physics, etc) against them. Certainly as bots get better hit rates go down, but I think there's a long way to go before bots are at that point.

DrussGT has a random gun as backup just in case some optimal bot does get invented ;) Unfortunately, it seems it has a weakness in the bullet power selection. I'll have to take a look at that...

Looking at DrussGT's virtual gun scores, the random gun comes surprisingly close to the other ones against top surfers! The learned weights for BeepBoop's anti-surfer gun (1) put a lot of emphasis on wall features, (2) put basically no weight on historical features like time since decel, and (3) put basically no weight on recency of the wave. This makes me wonder if BeepBoop's anti-surfer gun mostly acts as a slightly better random gun that is better at handling walls rather than something that learns patterns in the other bot's movement.

Well, DrussGT's random gun does take maximum reachable angles into account, which is probably 80% of wall effects. However there's probably something there about bots being hesitant to get closer to the enemy, even if they could potentially reach a further angle, which skews the distribution away from the reachable angles which are affected by walls. It would be interesting to do something with this hypothesis, but for me the random gun is really there as an emergency backup against someone simulating DrussGT's gun or something similar.

I had some strange experimental results. My guns constantly do worse than random against top surfers (oh no), but whenever I switch to a real random gun, my score only decreases. Maybe a learning gun gives better bullet shadows? There's been little study of how guns affect bullet shadowing, since it's passive. Maybe adding some "active" component to a random gun would help.

As long as both bots are using the same firepower, bullet collisions don't help you much: you don't get hit, but also you don't hit the opponent! So I thought a bit about adding active bullet shadowing to BeepBoop, but decided it wasn't worth the effort. I guess it could still help you situationally if for the moment your chance of hitting is lower than the opponent's. For example, if you are stuck near a wall, maybe you could create a shadow to cover your escape from the wall, but it would be complicated. Also for this reason, maybe hit rate should really be measured as hits / (shots - collisions), although getting this statistic for virtual guns isn't possible. If you are measuring hit rate as hits / shots, it could look like your random gun is better when really it just isn't creating as many bullet collisions.

And I guess BeepBoop's AS gun works pretty well not because it's some better random gun, but rather because most surfers have a significant weakness near walls. They generally weight distance very highly to avoid getting too close (future risk), which essentially makes them weak at dodging bullets in that scenario. Some repeated patterns exist because they have learned from the hit but still end up in the same position.
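The two hit-rate definitions can be put side by side as below. The class and method names are made up for illustration, and the counts are assumed to come from a real gun's bullet events, since virtual guns cannot supply the collision count.

```java
/** Two ways of measuring a gun's hit rate from simple counters. */
final class HitRate {
    /** Plain hits / shots. */
    static double raw(int hits, int shots) {
        return shots > 0 ? (double) hits / shots : 0.0;
    }

    /** hits / (shots - collisions): bullets lost to bullet-on-bullet collisions are not counted as misses. */
    static double collisionAdjusted(int hits, int shots, int collisions) {
        int effectiveShots = shots - collisions;
        return effectiveShots > 0 ? (double) hits / effectiveShots : 0.0;
    }
}
```

For example, with 10 hits out of 100 shots and 20 collisions, the raw rate is 0.1 while the adjusted rate is 0.125, so a gun that causes more collisions looks worse under the raw measure even if it aims equally well.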

You know, your description of using score estimation based firepower selection reminded me that back in 2010-2011 I was working on a thing akin to that for Midboss. I'd commented on it some I'm sure, but I don't think I ever described it in great detail, nor shared the code. I find it interesting to compare what I built back then to what BeepBoop is doing.

Posted it here now for interest's sake: Midboss/Score-Estimation_based_Firepower_Selection

It had some score estimation formulas that were pretty similar to BeepBoop's... but one key difference made it far, far slower. It performed that score estimation formula absurdly many times per tick. Rather than be content with some continuous-time estimate based on average rate of damage, it did brute force prediction of discrete future waves, all the permutations of hits/misses, for up to 30 waves into the future (though with caching to effectively re-join alike branches, since 2^30 would get silly), only performing the sort of score estimation BeepBoop does at a depth or minimum probability limit.

I'm not sure how much extra going for simulating discrete branching possibilities with discrete waves gained me, but the idea was that this would give it some more potential for interesting emergent 'cleverness', such as around the precise timing of things or amount of energy left for firing toward the very very end of the round. Boy did it chew up CPU though.

The fact that BeepBoop is using a form of score estimation in its firepower selection tempts me to some day go back to try to refine what I had started back then, so thanks :-)
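To illustrate why caching makes 30 waves tractable, here is a heavily simplified sketch. It is not the Midboss code: the state is reduced to (wave index, hits so far), every wave hits with the same assumed probability, and `leafValue` is a placeholder for whatever score estimate is applied at the depth limit.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntToDoubleFunction;

/** Expected leaf value over all hit/miss sequences, merging branches that reach the same state. */
final class WaveTree {
    private final int depth;                     // number of future waves to branch on
    private final double hitProb;                // assumed constant hit probability per wave
    private final IntToDoubleFunction leafValue; // placeholder score estimate, given total hits
    private final Map<Long, Double> cache = new HashMap<>();

    WaveTree(int depth, double hitProb, IntToDoubleFunction leafValue) {
        this.depth = depth;
        this.hitProb = hitProb;
        this.leafValue = leafValue;
    }

    /** Expected value of leafValue over every hit/miss permutation of the future waves. */
    double expectedValue() {
        return visit(0, 0);
    }

    /** Number of distinct interior states that were actually expanded. */
    int cachedStates() {
        return cache.size();
    }

    private double visit(int wave, int hits) {
        if (wave == depth) {
            return leafValue.applyAsDouble(hits);
        }
        long key = ((long) wave << 32) | hits;
        Double known = cache.get(key);
        if (known != null) {
            return known; // an alike branch was already explored: re-join it
        }
        double value = hitProb * visit(wave + 1, hits + 1)
                + (1.0 - hitProb) * visit(wave + 1, hits);
        cache.put(key, value);
        return value;
    }
}
```

With a depth of 30 this expands 465 interior states instead of walking 2^30 leaves. A real state would also have to carry things like both bots' energy, so the merging would be less dramatic than in this toy version.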

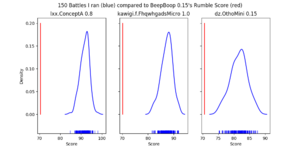

That's really cool, I didn't see that! I also built a bullet power simulator that took into account discrete firing, bullet flight time, etc. However, it didn't use tree search: I just did Monte Carlo rollouts of running it a couple hundred times and averaging the results, so it's probably much slower than yours! It's not used in BeepBoop, but I did use it to validate that BeepBoop's fast estimates assuming continuous time, normal distribution for hitrate, etc. were about right. For example, here and here are some plots showing that BeepBoop's approximations work pretty well, although not perfectly and with some edge cases (it says file uploads are disabled so I can't add them to the wiki). I sometimes see interesting emergent behavior from BeepBoop like firing high-power bullets when it's losing, presumably either to get more bullet damage and take less bullet damage before it dies or in the hope that a lucky high-power hit turns things around.
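The rollout idea can be sketched in a few lines. This is a toy version, not the simulator described above: it ignores bullet flight time, gun heat and energy, assumes a fixed hit probability, and uses made-up names. Only the bullet damage formula (4 x power, plus 2 x (power - 1) above power 1) comes from the Robocode rules.

```java
import java.util.Random;

/** Toy Monte Carlo estimate of bullet damage dealt over a fixed number of shots. */
final class BulletRollouts {
    /** Robocode bullet damage: 4 * power, plus 2 * (power - 1) when power > 1. */
    static double bulletDamage(double power) {
        return 4.0 * power + (power > 1.0 ? 2.0 * (power - 1.0) : 0.0);
    }

    /** One rollout: each shot hits independently with probability hitProb (an assumption). */
    static double rollout(int shots, double power, double hitProb, Random rng) {
        double dealt = 0.0;
        for (int i = 0; i < shots; i++) {
            if (rng.nextDouble() < hitProb) {
                dealt += bulletDamage(power);
            }
        }
        return dealt;
    }

    /** Average damage over many rollouts; the seed makes the estimate repeatable. */
    static double averageDamage(int rollouts, int shots, double power, double hitProb, long seed) {
        Random rng = new Random(seed);
        double total = 0.0;
        for (int r = 0; r < rollouts; r++) {
            total += rollout(shots, power, hitProb, rng);
        }
        return total / rollouts;
    }
}
```

In this toy setting the average can be checked against the closed form shots x hitProb x bulletDamage(power), which is the same kind of sanity check as comparing rollouts against a fast continuous-time estimate.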

I think I got uploading working... give it a try and let me know.

This new bot of yours really is awesome! It is really beating the hell out of the top bots, even without BulletShielding.

Alas I am not able to run any battles for it, as I am still on Java 8.

Alas, in version 0.11 some parts are still not Java 8 compatible: kc/mega/game/Battleffield has been compiled by version 57.0.

It does not matter that much; I am just not able (currently) to run any battles for it. The same goes for Raven, as it has been compiled by version 55.0.

I've downloaded Java 13, so I can now run battles for BeepBoop. After rebuilding the robot database, Raven and WaveShark also run fine. Note that for my own development I will still use the compiler option '-source 1.8'.

Oh wow, missed this! Awesome work Kev, you have a history of popping up with surprise entries =)

I'd be curious to know more about the TensorFlow work you did to make the KNN features...

Thanks! I wrote a brief description under BeepBoop/Understanding_BeepBoop, but I'll release the code too once I get it cleaned up.

Aha, I missed the last section. Surprised there wasn't more to gain from some kind of deeper embedding model.

Me too, and I'll maybe revisit it at some point. Theoretically a deeper embedding model could learn feature interactions like "wall-ahead is more important when velocity is 8 than when it is 0"

I'm surprised as well. Btw, how many layers are you using in the deeper model? And is it fully connected? I guess deeper models with explicit feature interactions may work better in the Robocode scenario, given the high noise. I would try things like Deep&Cross, DeepFM, etc.

It's possible that the KNN already takes that into account sufficiently. Maybe if you bump the cluster size up a lot, and change the kernel width for cluster weighting, it might force this part of the learning into the NN instead?
