User talk:Chase-san/Kd-Tree

NullPointerExceptions and inaccuracy

Trying to test your Kd-Tree in the benchmark framework, I'm getting some NullPointerExceptions due to right.rect.getNearest(k) returning null sometimes. I don't know if it's the proper fix, but I changed the relevant lines to be like:

PointKD p = right.rect.getNearest(k);

if(p != null && k.distanceSq(p) < t) {

Something else is wrong also, I believe, because it's only getting "88% accuracy" out of the benchmark results, so it's not finding all of the nearest neighbors correctly. --Rednaxela 22:23, 1 March 2010 (UTC)
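A minimal, self-contained sketch of the guard quoted above. The `PointKD` and `RectKD` classes here are hypothetical stand-ins written only for illustration (they are not the classes from the tree under discussion), and the sketch assumes the rectangle returns null from `getNearest` when it has never been expanded:

```java
// Hypothetical stand-in for the point type mentioned in the post.
final class PointKD {
    final double[] coords;

    PointKD(double... coords) { this.coords = coords; }

    double distanceSq(PointKD o) {
        double sum = 0;
        for (int i = 0; i < coords.length; i++) {
            double d = coords[i] - o.coords[i];
            sum += d * d;
        }
        return sum;
    }
}

// Hypothetical bounding rectangle whose bounds stay null until the first add.
final class RectKD {
    private double[] min, max;

    void expand(PointKD p) {
        if (min == null) {
            min = p.coords.clone();
            max = p.coords.clone();
            return;
        }
        for (int i = 0; i < min.length; i++) {
            min[i] = Math.min(min[i], p.coords[i]);
            max[i] = Math.max(max[i], p.coords[i]);
        }
    }

    // Closest point of the rectangle to k, or null if nothing was ever added.
    PointKD getNearest(PointKD k) {
        if (min == null) return null;
        double[] c = new double[min.length];
        for (int i = 0; i < c.length; i++) {
            c[i] = Math.max(min[i], Math.min(max[i], k.coords[i]));
        }
        return new PointKD(c);
    }
}

final class NullGuardDemo {
    // True if the region bounded by rect could hold a point closer than t
    // (t is a squared distance). An empty region is simply skipped.
    static boolean worthSearching(RectKD rect, PointKD k, double t) {
        PointKD p = rect.getNearest(k);
        return p != null && k.distanceSq(p) < t;
    }

    public static void main(String[] args) {
        RectKD empty = new RectKD();
        RectKD filled = new RectKD();
        filled.expand(new PointKD(0.0, 0.0));
        filled.expand(new PointKD(2.0, 2.0));
        PointKD query = new PointKD(3.0, 1.0);
        System.out.println(worthSearching(empty, query, 10.0));  // false
        System.out.println(worthSearching(filled, query, 10.0)); // true
    }
}
```

The guard only hides the symptom: it avoids the exception, but it says nothing about why a rectangle ended up without bounds in the first place.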

- That should only happen if the rectangle hasn't gotten expanded ever, which shouldn't happen, unless you haven't added anything to the tree at all (darn me and removing all my safeties), since this gets set during the add. Can you give me some idea of the data you are feeding into it? --Chase 22:44, 1 March 2010 (UTC)

- Well, it's adding to the tree before it's searching. See, File:KNN.jar along with Diamond vs CunobelinDC gun data, a recording I made of every kd-tree operation performed by an older version of Voidious's Diamond during a battle. I can upload an updated KNN.jar that includes the testing of your tree if you wish. Personally I've found the framework invaluable for testing my tree, making it easy to see when something I just did added a bug. --Rednaxela 22:55, 1 March 2010 (UTC)

- I see, I got it running, and I get the error too. Let me see what's going on. --Chase 23:25, 1 March 2010 (UTC)

- Well, I found parts of the problem. It gets 100% now, but it's pretty slow (though not as slow as linear search). I suppose that is to be expected, seeing how hard it is on the GC. Still not sure why Rect is returning null, since it shouldn't (probably something stupid, per usual). Well, I based this new one off my old one; maybe I should write one from scratch. :P --Chase 00:02, 2 March 2010 (UTC)

K-NEAREST NEIGHBOURS ALGORITHMS BENCHMARK
-----------------------------------------
Reading data from data.csv
Read 25621 saves and 10300 searches.
Running 10 repetition(s) for k-nearest neighbours searching:
:: 13 dimension(s); 40 neighbour(s)
Warming up the JIT with 5 repetitions first...
Running tests... COMPLETED.

RESULT << k-nearest neighbours search with Voidious' Linear search >>
: Average searching time = 2.007 miliseconds
: Average worst searching time = 37.627 miliseconds
: Average adding time = 3.96 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Chase-san's Bucket PR k-d tree >>
: Average searching time = 1.023 miliseconds
: Average worst searching time = 29.937 miliseconds
: Average adding time = 8.38 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Simonton's Bucket PR k-d tree >>
: Average searching time = 0.493 miliseconds
: Average worst searching time = 45.444 miliseconds
: Average adding time = 7.15 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Voidious' Bucket PR k-d tree >>
: Average searching time = 0.836 miliseconds
: Average worst searching time = 35.729 miliseconds
: Average adding time = 6.39 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Rednaxela's Bucket kd-tree >>
: Average searching time = 0.152 miliseconds
: Average worst searching time = 38.736 miliseconds
: Average adding time = 7.43 microseconds
: Accuracy = 100%

BEST RESULT:
- #1 Rednaxela's Bucket kd-tree [0.1518]
- #2 Simonton's Bucket PR k-d tree [0.4928]
- #3 Voidious' Bucket PR k-d tree [0.8362]
- #4 Chase-san's Bucket PR k-d tree [1.0227]
- #5 Voidious' Linear search [2.0067]

Benchmark running time: 493.49 seconds

Some results... All I can say is that with this current implementation, the worst search time is the lowest of all of them (though not by a ton, I suppose). I wonder why that is. --Chase 00:29, 2 March 2010 (UTC)

RESULT << k-nearest neighbours search with Chase-san's Bucket PR k-d tree >>
: Average searching time = 0.649 miliseconds
: Average worst searching time = 34.114 miliseconds
: Average adding time = 8.92 microseconds
: Accuracy = 10

It seems caching the distance cut the time by about 40% (the other times are close to what they are above). Oh well, this is probably my last post on the wiki tonight. --Chase 00:45, 2 March 2010 (UTC)
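The post does not say how the distance was cached. One plausible reading is that each candidate's squared distance to the query is computed once and stored next to the point, instead of being recomputed on every comparison while ordering candidates. A rough sketch under that assumption (all names here are hypothetical):

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class CachedDistanceDemo {
    // A candidate paired with its squared distance to the query, computed once.
    static final class Entry {
        final double[] point;
        final double distSq;

        Entry(double[] point, double distSq) {
            this.point = point;
            this.distSq = distSq;
        }
    }

    static double distanceSq(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    // Orders candidates by distance to the query. The comparator only reads
    // the cached field, so distanceSq runs exactly once per point.
    static List<Entry> sortByDistance(List<double[]> points, double[] query) {
        List<Entry> entries = new ArrayList<>(points.size());
        for (double[] p : points) {
            entries.add(new Entry(p, distanceSq(p, query)));
        }
        entries.sort(Comparator.comparingDouble(e -> e.distSq));
        return entries;
    }

    public static void main(String[] args) {
        List<double[]> points = new ArrayList<>();
        points.add(new double[] {3, 0});
        points.add(new double[] {1, 0});
        points.add(new double[] {2, 0});
        for (Entry e : sortByDistance(points, new double[] {0, 0})) {
            System.out.println(e.distSq); // 1.0, then 4.0, then 9.0
        }
    }
}
```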

As far as "I wonder why that is", I would say a variety of factors are involved. Firstly, I'd say the way you use comparators slows things down, due to the calling overhead. Secondly, a default bucket size of 200 seems odd; I've found that the optimal bucket size is more in the area of 24 to 32, depending on the specifics of the tree. With those things changed, as well as some other optimization details here and there, I'd guess the speed could get to around that of Duyn's tree perhaps, or at least as fast as Simonton's tree. To go a step further, one could replace the deque with a heap, in order to avoid the overhead of fully sorting points that will not be part of the final set of returned points. Anyway, not a bad job though. It does beat Voidious' tree so far after all, which is a well enough made tree. :) --Rednaxela 01:15, 2 March 2010 (UTC)
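The heap suggestion can be sketched roughly as follows. This is an illustration using `java.util.PriorityQueue` as a bounded max-heap over squared distances, not code from any of the trees in the benchmark; `kSmallest` is a hypothetical helper name. The heap never holds more than k entries, and a candidate that is no better than the current worst is rejected without any sorting work:

```java
import java.util.Comparator;
import java.util.PriorityQueue;

final class BoundedMaxHeapDemo {
    // Returns the k smallest values from distances, in ascending order.
    static double[] kSmallest(double[] distances, int k) {
        if (k <= 0) return new double[0];
        // Reverse ordering turns the min-heap into a max-heap, so peek()
        // is always the worst distance currently kept.
        PriorityQueue<Double> heap = new PriorityQueue<>(k, Comparator.reverseOrder());
        for (double d : distances) {
            if (heap.size() < k) {
                heap.add(d);
            } else if (d < heap.peek()) {
                heap.poll(); // evict the current worst
                heap.add(d);
            }
        }
        // Polling yields largest first, so fill the output from the back.
        double[] out = new double[heap.size()];
        for (int i = out.length - 1; i >= 0; i--) {
            out[i] = heap.poll();
        }
        return out;
    }

    public static void main(String[] args) {
        double[] best = kSmallest(new double[] {5, 1, 4, 2, 3}, 3);
        for (double d : best) {
            System.out.println(d); // 1.0, then 2.0, then 3.0
        }
    }
}
```

In a real tree the heap would carry the points (or their values) alongside the distances, and `peek()` would also supply the pruning threshold for skipping subtrees.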

- Here is the lowdown. It seems what was causing the problem with the null was a bucket getting points with exactly the same value on a certain dimension, meaning that all the points were placed on one side or the other (in this case the left) of that dimension, and the other side got nothing (poor child). The fact is the rectangles were only defined when something was added to the child, and I assumed that since I divided the domain perfectly in half, this error was impossible to get (as I mentioned in my first bit there).

That should only happen if the rectangle hasn't gotten expanded ever, which shouldn't happen, unless you haven't added anything to the tree at all

I had pretty much answered my own question, but was too dense to realize it. However, I got tired of poking at my old tree (performance just kept getting worse), so I made a cut-down version with a ShiftArray in it (basically, array-copy stuff back along the array and just insert the item in place, which is what my PriorityDeque pretty much did anyway, but this does it with much less code). I got rid of the whole PointKD and RectangleKD classes; now I have just a thing that takes double[]. I also added a value clause, so it is not just a key-tree or set anymore. I am running 10 iterations with KNN to get a benchmark, and I will post those when they finish (on another computer). --Chase 03:38, 2 March 2010 (UTC)
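A rough reconstruction of the ShiftArray idea as described in the post: a fixed-capacity array kept in ascending key order, where inserting means shifting the tail back one slot with `System.arraycopy` and dropping the worst entry when full. This is a sketch written from that description only; the class and method names are assumptions, not the actual implementation:

```java
final class ShiftArraySketch {
    private final double[] keys;   // ascending, e.g. squared distances
    private final Object[] values; // payload stored alongside each key
    private int size;

    ShiftArraySketch(int capacity) {
        if (capacity < 1) throw new IllegalArgumentException("capacity must be >= 1");
        keys = new double[capacity];
        values = new Object[capacity];
    }

    // Inserts in sorted position. When full, the largest key falls off the
    // end. Returns false if the entry was not good enough to be kept.
    boolean offer(double key, Object value) {
        if (size == keys.length && key >= keys[size - 1]) return false;
        int pos = size;
        while (pos > 0 && keys[pos - 1] > key) pos--; // scan from the back
        // Last index the shifted tail may occupy: one past the end while
        // there is room, otherwise the final slot (overwriting the worst).
        int last = Math.min(size, keys.length - 1);
        System.arraycopy(keys, pos, keys, pos + 1, last - pos);
        System.arraycopy(values, pos, values, pos + 1, last - pos);
        keys[pos] = key;
        values[pos] = value;
        if (size < keys.length) size++;
        return true;
    }

    int size() { return size; }
    double keyAt(int i) { return keys[i]; }
    Object valueAt(int i) { return values[i]; }

    public static void main(String[] args) {
        ShiftArraySketch best = new ShiftArraySketch(3);
        best.offer(5, "e");
        best.offer(1, "a");
        best.offer(4, "d");
        best.offer(2, "b"); // pushes out the entry with key 5
        for (int i = 0; i < best.size(); i++) {
            System.out.println(best.keyAt(i) + " " + best.valueAt(i));
        }
    }
}
```

Each insert costs a linear shift, but for small k the copy is a single tight `arraycopy`, which may be why it competes well with heavier priority structures.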

K-NEAREST NEIGHBOURS ALGORITHMS BENCHMARK
-----------------------------------------
Reading data from data.csv
Read 25621 saves and 10300 searches.
Running 10 repetition(s) for k-nearest neighbours searching:
:: 13 dimension(s); 40 neighbour(s)
Warming up the JIT with 5 repetitions first...
Running tests... COMPLETED.

RESULT << k-nearest neighbours search with Voidious' Linear search >>
: Average searching time = 1.873 miliseconds
: Average worst searching time = 34.274 miliseconds
: Average adding time = 3.42 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Chase-san's Bucket PR k-d tree >>
: Average searching time = 0.532 miliseconds
: Average worst searching time = 24.898 miliseconds
: Average adding time = 7.76 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Chase's Hyper Bucket KD-Tree >>
: Average searching time = 0.433 miliseconds
: Average worst searching time = 24.474 miliseconds
: Average adding time = 6.82 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Simonton's Bucket PR k-d tree >>
: Average searching time = 0.437 miliseconds
: Average worst searching time = 38.07 miliseconds
: Average adding time = 6.36 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Voidious' Bucket PR k-d tree >>
: Average searching time = 0.763 miliseconds
: Average worst searching time = 31.013 miliseconds
: Average adding time = 5.62 microseconds
: Accuracy = 100%

RESULT << k-nearest neighbours search with Rednaxela's Bucket kd-tree >>
: Average searching time = 0.138 miliseconds
: Average worst searching time = 36.875 miliseconds
: Average adding time = 6.69 microseconds
: Accuracy = 100%

BEST RESULT:
- #1 Rednaxela's Bucket kd-tree [0.1375]
- #2 Chase's Hyper Bucket KD-Tree [0.4326]
- #3 Simonton's Bucket PR k-d tree [0.4372]
- #4 Chase-san's Bucket PR k-d tree [0.5324]
- #5 Voidious' Bucket PR k-d tree [0.7634]
- #6 Voidious' Linear search [1.873]

Benchmark running time: 463.63 seconds

I am not you, Rednaxela; I think this is as far as I am going to get. I think I will post the hyper version though (it is smaller). --Chase 03:44, 2 March 2010 (UTC)

Oh yeah, am I the only one that has a Range function?

Pretty sure. At least, the only one with a Hyperrectangle style one. --Rednaxela 05:17, 2 March 2010 (UTC)
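For readers unfamiliar with the term, a hyperrectangle-style range query returns every point that lies inside an axis-aligned box given by a minimum and maximum corner. The sketch below is only an illustration of that idea with hypothetical names. It scans a flat list for clarity, whereas a kd-tree version would also skip any subtree whose bounding box fails the `intersects` test:

```java
import java.util.ArrayList;
import java.util.List;

final class HyperRectRangeDemo {
    // True if p lies inside the box [min, max] on every dimension (inclusive).
    static boolean contains(double[] min, double[] max, double[] p) {
        for (int i = 0; i < p.length; i++) {
            if (p[i] < min[i] || p[i] > max[i]) return false;
        }
        return true;
    }

    // True if two boxes overlap on every dimension; a tree could use this
    // to decide whether a subtree needs to be visited at all.
    static boolean intersects(double[] minA, double[] maxA, double[] minB, double[] maxB) {
        for (int i = 0; i < minA.length; i++) {
            if (maxA[i] < minB[i] || maxB[i] < minA[i]) return false;
        }
        return true;
    }

    // Linear-scan range query: all points inside the box.
    static List<double[]> range(List<double[]> points, double[] min, double[] max) {
        List<double[]> hits = new ArrayList<>();
        for (double[] p : points) {
            if (contains(min, max, p)) hits.add(p);
        }
        return hits;
    }

    public static void main(String[] args) {
        List<double[]> points = new ArrayList<>();
        points.add(new double[] {0, 0});
        points.add(new double[] {1, 1});
        points.add(new double[] {2, 3});
        List<double[]> hits = range(points, new double[] {0.5, 0.5}, new double[] {2, 2});
        System.out.println(hits.size()); // 1
    }
}
```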

KDTreeF

(Thread with 2 replies; last modified 00:28, 10 June 2012)

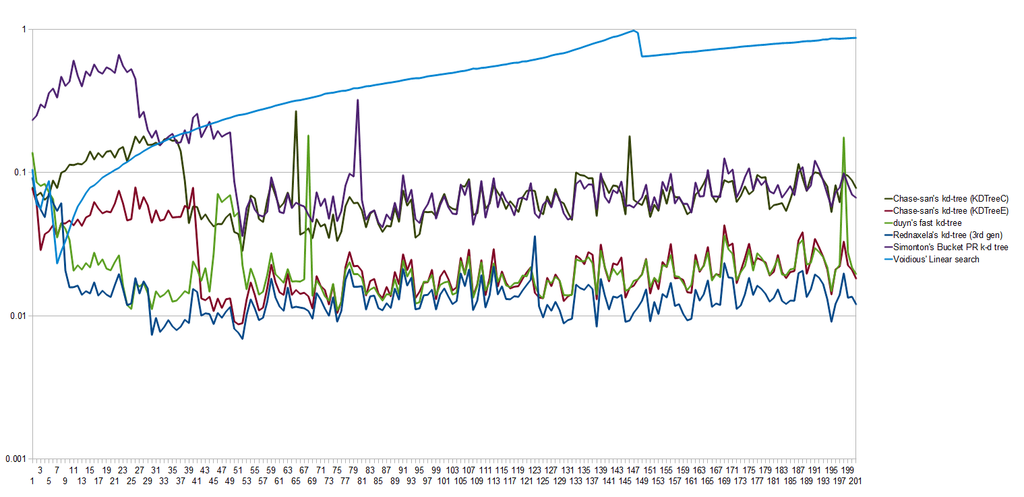

K-NEAREST NEIGHBOURS ALGORITHMS BENCHMARK
-----------------------------------------
Reading data from gun-data-Diamond-vs-jk.mini.CunobelinDC 0.3.csv.gz
Read 25621 saves and 10300 searches.
Running 20 repetition(s) for k-nearest neighbours searching:
:: 13 dimension(s); 10 neighbour(s)

RESULT << k-nearest neighbours search with Chase-san's kd-tree (KDTreeC) >>
: Average searching time = 0.0776 miliseconds
: Average worst searching time = 11.1474 miliseconds
: Average adding time = 1.5965 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Chase-san's kd-tree (KDTreeF) >>
: Average searching time = 0.0259 miliseconds
: Average worst searching time = 1.0691 miliseconds
: Average adding time = 1.5182 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with duyn's basic kd-tree >>
: Average searching time = 0.2016 miliseconds
: Average worst searching time = 10.4197 miliseconds
: Average adding time = 0.8725 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with duyn's fast kd-tree >>
: Average searching time = 0.0245 miliseconds
: Average worst searching time = 10.5265 miliseconds
: Average adding time = 3.4632 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with duyn's optimised kd-tree >>
: Average searching time = 0.0264 miliseconds
: Average worst searching time = 13.8944 miliseconds
: Average adding time = 3.4938 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Voidious' Linear search >>
: Average searching time = 0.4874 miliseconds
: Average worst searching time = 14.7098 miliseconds
: Average adding time = 1.0922 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Nat's Bucket PR k-d tree >>
: Average searching time = 0.2280 miliseconds
: Average worst searching time = 188.3711 miliseconds
: Average adding time = 27.4455 microseconds
: Accuracy = 27.12%

RESULT << k-nearest neighbours search with Rednaxela's kd-tree (2nd gen) >>
: Average searching time = 0.0183 miliseconds
: Average worst searching time = 1.8054 miliseconds
: Average adding time = 1.8136 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Rednaxela's kd-tree (3rd gen, iterated) >>
: Average searching time = 0.0183 miliseconds
: Average worst searching time = 11.8064 miliseconds
: Average adding time = 1.9969 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Rednaxela's kd-tree (3rd gen) >>
: Average searching time = 0.0155 miliseconds
: Average worst searching time = 2.7069 miliseconds
: Average adding time = 2.4344 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Simonton's Bucket PR k-d tree >>
: Average searching time = 0.1336 miliseconds
: Average worst searching time = 12.6894 miliseconds
: Average adding time = 4.1237 microseconds
: Accuracy = 100.0%

RESULT << k-nearest neighbours search with Voidious' Bucket PR k-d tree >>
: Average searching time = 0.1115 miliseconds
: Average worst searching time = 8.2543 miliseconds
: Average adding time = 2.2128 microseconds
: Accuracy = 100.0%

BEST RESULT:
- #1 Rednaxela's kd-tree (3rd gen) [0.0155]
- #2 Rednaxela's kd-tree (2nd gen) [0.0183]
- #3 Rednaxela's kd-tree (3rd gen, iterated) [0.0183]
- #4 duyn's fast kd-tree [0.0245]
- #5 Chase-san's kd-tree (KDTreeF) [0.0259]
- #6 duyn's optimised kd-tree [0.0264]
- #7 Chase-san's kd-tree (KDTreeC) [0.0776]
- #8 Voidious' Bucket PR k-d tree [0.1115]
- #9 Simonton's Bucket PR k-d tree [0.1336]
- #10 duyn's basic kd-tree [0.2016]
- #11 Nat's Bucket PR k-d tree [0.2280]
- #12 Voidious' Linear search [0.4874]

Benchmark running time: 450.15 seconds

Really managed to hammer down the average worst search time, and the average search time is now about a third of KDTreeC's. Still not really on par with Rednaxela's tree though.

Nice stuff! With that graph, it looks like the place you have the most room to squeeze out more performance, if you wish to, is by refactoring the code so that the JIT gets it optimized sooner. Having the lowest time in those first few searches is certainly neat, and perhaps is the most important thing in the Robocode context, as that's the most likely time for a kd-tree to lead to a skipped turn. I wonder what it took to get that down and how much of that is dataset specific.

On the flip side, you have a smaller data set earlier on, so it might balance out to a point. But I do intend to work on that a bit. I actually originally tuned with a random data set.

Also, the current tree is based off KDTreeC, but I removed the Item class and altered the return to use a heap. Though I was considering having it implement SortedMap and just returning that interface, so that I could avoid the issue of defining an external class.

I am mulling over how to redesign my tree so that it works better with the JIT, and so that I create/destroy fewer objects (which should save some time).